NetworkX - Quality and Evolution

In the third essay of this series, we will describe the Software Quality Assurance (SQA) processes applied by the project and analyse the source-code for quality. These processes ensure that any version of the package (including development versions) function as expected.

To recap, NetworkX is a python library for complex-network analysis, which has varied use-cases and stakeholders.

Overall Software Quality Processes

NetworkX makes the entire SQA process robust, using the following

- Version Control: NetworkX is open-source and resides on a GitHub repository. This means all code changes by various contributors are tracked and managed at a fine-grained level.

- Testing: NetworkX uses pytest to test all the implemented functionalities1.

- Continuous Integration (CI): GitHub Actions is used to automate various tests, style-checks, and builds for different platforms.

- Open-Source contribution: they use Github’s Issues to track and manage the community’s issues. The rich categorization and labelling along with statistics of the users’ needs, ensures that the developers always understand the users’ experience with the software.

Continuous Integration Processes

The recommended process from designing a new feature/fix, to it being integrated or being released is detailed in the contribution guide2. From our experience of getting a Pull Request PR approved), here is the summarised sequence of events.

Step 1: Designing a solution

A contributor should indicate they want to work on an Issue. This enables rich feedback from the developers before you start designing a solution and coding. Branch from the main for coding the solution with an appropriate name, e.g. bugfix-issue-0420-patch-3. This ensures the solution can be tested independently and can be merged back into main without conflicts. Follow the official coding guidelines2: ensure tests, documentation, code-style checks, and code decorations are done as required.

Step 2: Create a PR

Once the solution seems complete create a PR. This will enable the following:

- Checking that the changes can be merged without conflict

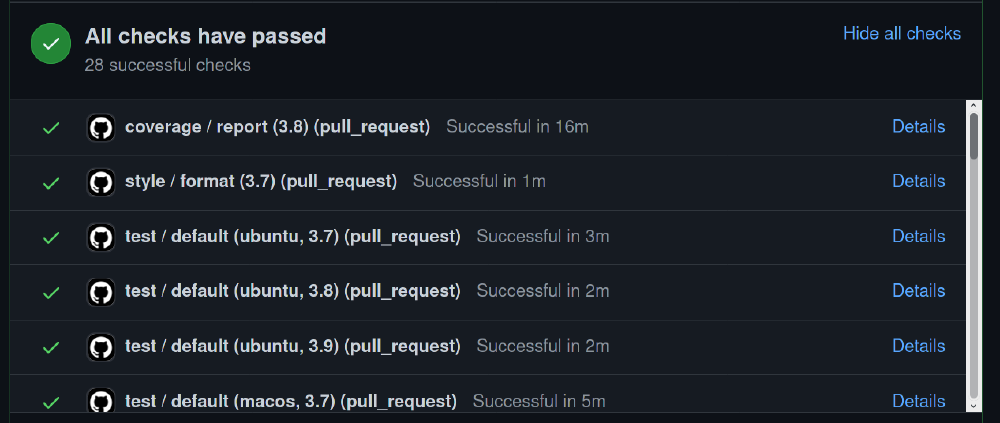

Figure: Example from the PR. [source]

- The automated CI pipeline ensures the merge will work on different platforms and all tests pass. It checks the code-style using pylint. Runs the testing module for NetworkX on various virtual-machines with different distributions of MacOS, Ubuntu and Windows. It also checks that the test coverage is good.

Figure: Example from the PR. [source]

- One or more of the core developers will review your changes and recommend more changes from different perspectives: software engineering, algorithmic complexity, testing, maintainability, etc

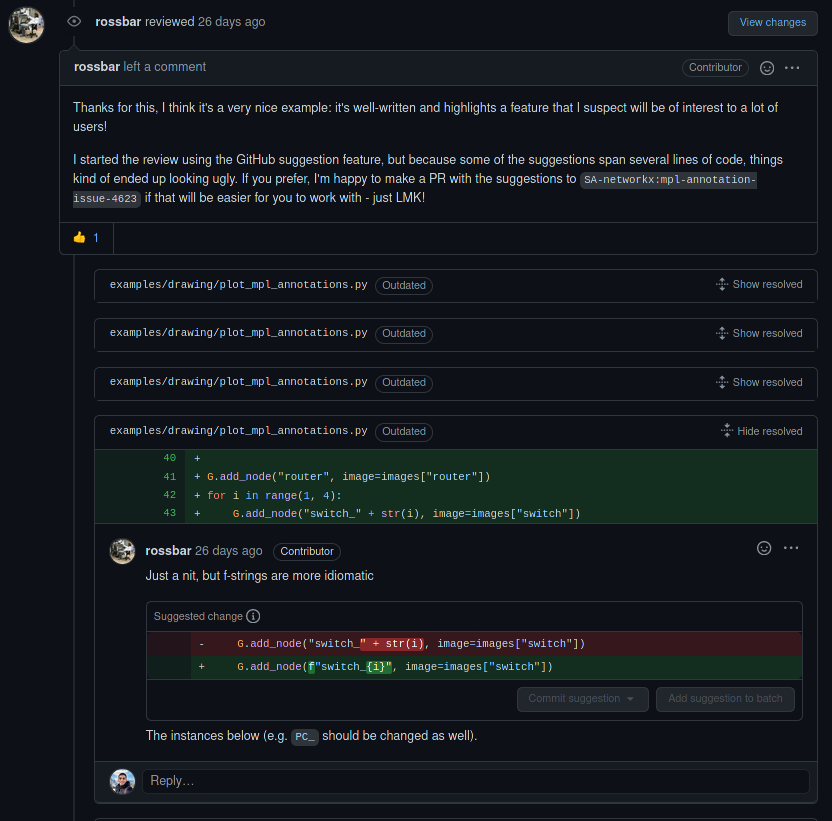

Figure: Example from the PR: code-style changes. [source]

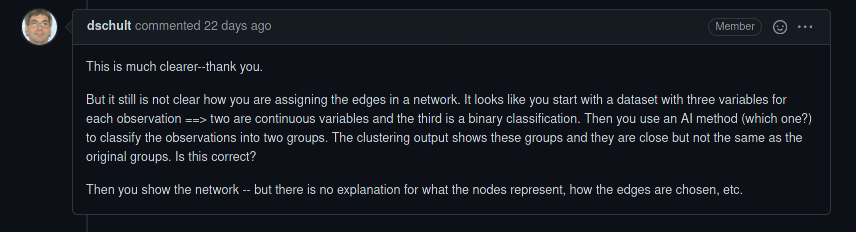

Figure: Example from the PR: rich improvements. [source]

Step 3: Final Steps Modifications

After addressing various concerns on the PR, if everything looks good and works then the merge will be approved. The merge will not necessarily be immediately deployed. For instance, an example may be part of a series and needs to wait for other contributions, whereas a bugfix that is intended to fix the latest release will be deployed immediately.

Test Processes and the role of test coverage

NetworkX uses the pytest framework, which provides the environment to quickly generate tests for the source-code. NetworkX tests can be run easily with the command PYTHONPATH=. pytest networkx, which tests over 20,000 statements. In CONTRIBUTING.rst2, the maintainers of the project have clearly stated:

The test suite has to pass before a pull request can be merged, and tests should be added to cover any modifications to the code base.

NetworkX uses unit tests, which are used to test standalone “units” of code. As mentioned in the previous blog3, NetworkX is primarily composed of distinct functions for many components. The functional style allows for more straightforward unit testing, as each function can be tested in isolation.

After running pytest with code coverage, we see that the coverage of NetworkX is very high, at 94%:

Stmts Miss Branch BrPart Cover

-----------------------------------------------

26303 1268 13418 623 94%

However, coverage is not always the best metric to minimize errors. Although new functionality must be tested, this does not necessarily mean that the quality of the test cases is always high. Contributors may see testing as a chore, and not focus so much effort on making them realistic to the system usage, which minimizes their effectiveness at reducing errors. For example, the shortest path graph algorithm may have edge cases that are not handled on specific graph types (e.g a directed graph).

That being said, generally, testing is of a good quality in NetworkX and is monitored by the maintainers of the project, as can be seen in the following issues: (#4463, #4647, #4144)

Hotspot Components from the past

NetworkX follows the Model-View-Controller architecture3 for the core functional elements. The other problem-specific functionality is horizontally extendable through independent functions. This means certain functionality is coded by domain-experts who need not necessarily be good software engineers. These portions of the code become primary candidates for hotspots.

To analyse the hotspot components we will be using CodeScene which offers dynamic analysis of code-repositories by providing various metrics and insights. We will primarily utilize the code analyzer and hotspot map. CodeScene identifies the hotspots in the following way45:

- It goes through each file and measures the lines of code with the number of associated changes/commits.

- Identifies code health for each file by applying rules describing good code practices.

- Analyses complexity rating by the number of nested control statements.

- And identifies the number of changes per defined function.

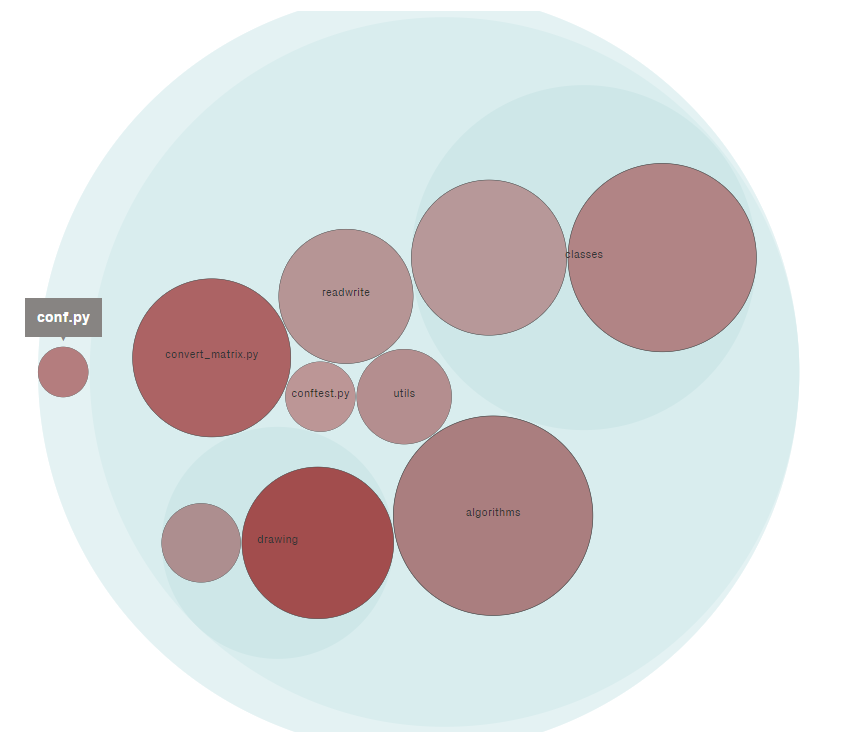

In order to reduce the scope of analysis and highlight the most prominent hotspots, we will be looking only at those hotspots which have change frequency higher than 50%, meaning that resulting hotspots will have 50% of all changes ever committed to the project. The figure below shows the resulting plot.

Figure: Hotspot relevance map: size indicates the complexity and colour-contrast the change frequency. Light blue circles indicate folders/modules. Some folders contain only one relevant hotspot after filtering

Now, to correctly identify the troublesome hotspots we need to understand that it is possible that retrieved files are not really a hotspot, e.g. a documentation file might be incorrectly identified due to frequent changes. As such, we will further filter hotspots based on the purpose of the file, its content, complexity rating and code health score. Thus, we identified the following as hotspot files: algorithms.weighted.py, drawing.nx_pylab.py, convert_matrix.py, readwrite.graphml.py, classes.function.py and classes.graph.py.

Hotspot identification allows developers to find inefficient, costly, and possibly difficult to maintain code. These are potential targets for refactoring in order to prevent expensive changes in the future. Regarding NetworkX, we can see that identified hotspots are large code files with multiple functions which are experiencing changes ever so often for the past seven years.

Code quality & hotspots

It is important to know what are the reasons behind the hotspots and if there is a way to not only mitigate them but also improve overall code quality. By using the insights gained from CodeScene analysis we know that all of the hotspot-files have more than 1000 lines of code which in itself is a bad practice and unnecessarily complicates code, it’s better to introduce extract classes and separate them than have a single large class 6. Then we can see that all the hotspots have either long method or complex method issues identified by the number of code lines and high cyclomatic complexity rating, respectively 7.

Considering more serious concerns, we can find a “Bumpy Road” issue in every hotspot with the majority of them having “Deep Nested Complexity” which indicates a high number of nested control structures (conditional and loop statements) within one or multiple functions (current threshold is >=4 nested structures). To mitigate this problem “Bumpy Roads” should be mitigated first to correctly encapsulate and possibly apply function extraction, then high complexity can be solved by refactoring the control statements 7.

Hotspots weighted.py and convert_matrix.py have a “Brain Method” issue. Primarily, “Brain Methods” are large and complex functions that centralize the behaviour of the module and violate the Single Responsibility Principle - the more complex the function, the lower the code health. In order to mitigate this issue, the function requires heavy refactoring by identifying the different responsibilities of the function and extracting them into separate functions 8. These modules have the lowest code health score (3.51 and 4.0 on a scale of 10) among all other hotspots, while the rest have an average score of 7.55.

The Quality Culture at NetworkX

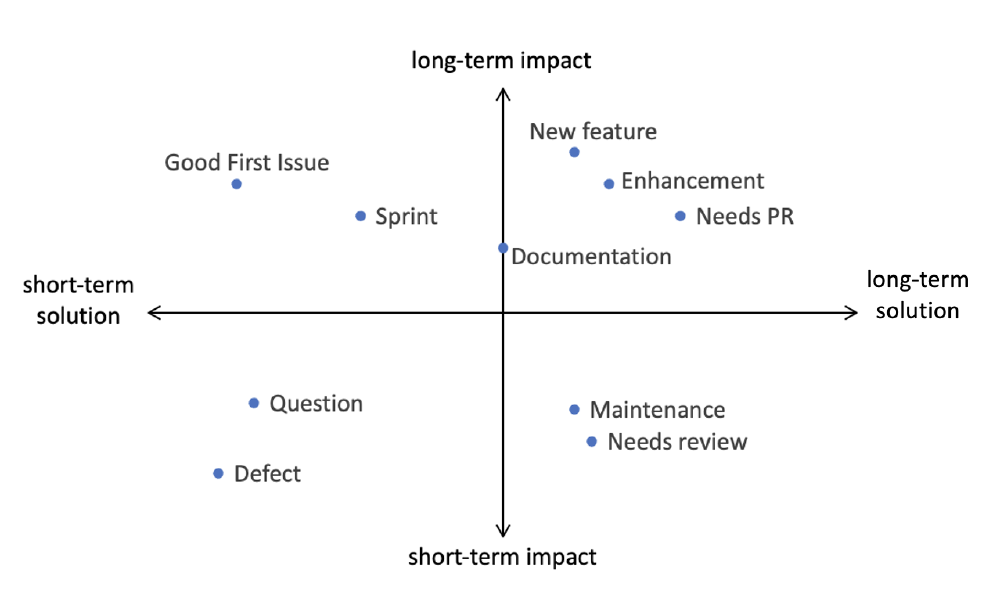

In order to illustrate the quality culture present at NetworkX, we created a quadrant chart to illustrate the distribution of the ten most active GitHub Issue and PR labels in two dimensions, solution and impact, to explain the quality culture under short-term and long-term strategies.

Figure: Quadrant chart of issue/PR labels

Each quadrant in this figure shows the property of labels and for each axis, we have defined the following:

- A short-term solution means that these issues or PRs can be resolved in a short period (one day of work maximum), such as a simple bugfix. While a long-term solution indicates that the issues/PRs will take a longer period of time (longer than a day of work), such as a new feature.

- A short-term impact issue/PR indicates that on resolving the issue, it is likely to have no long-term effects and lead to further issues, an example would be a simple translation addition. A long-term impact issue will have greater knock-on effects in the long-term, and could lead to further issues. An example of this would be adding a new complex graph type.

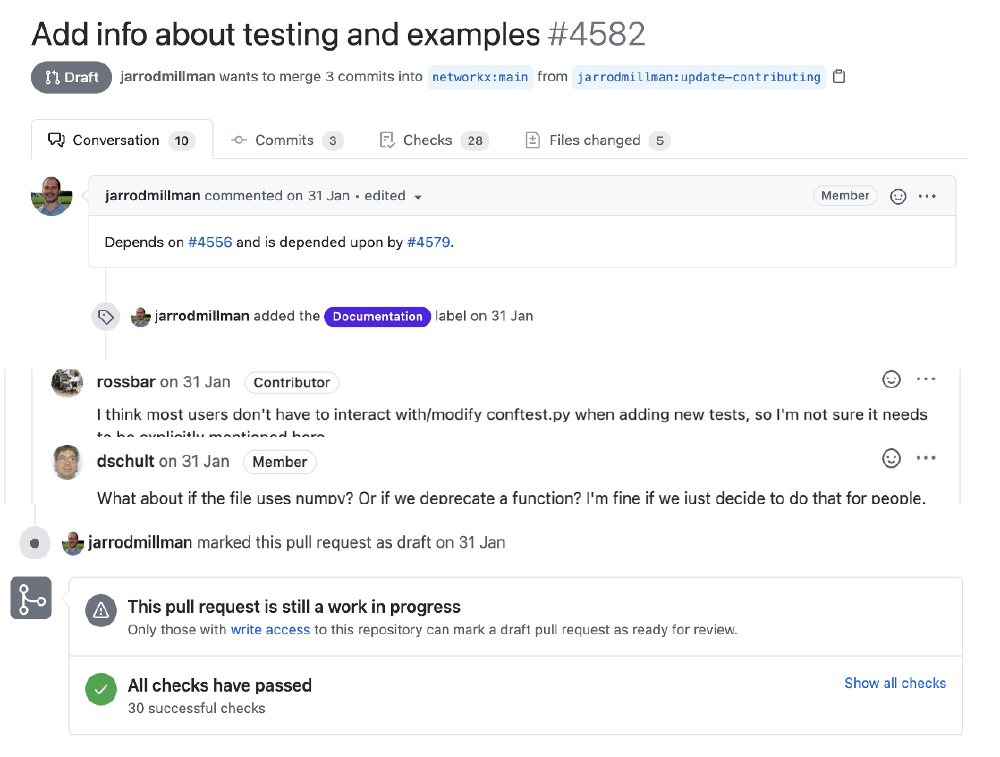

On investigating “Documentation” labelled issues/PRs in NetworkX, a large number of simple and actionable issues have been solved quickly and efficiently. This is a testament to the quality culture at NetworkX. However, a small portion of issues remain open, which mostly relate to more detailed documentation, with long-term goals and higher level discussion. One example of this is in PR #4582, where the initial solution was created quickly by the assigned developer, but further additions were brought up by the reviewers related to this solution. As a result, we would describe this as a short-term solution with long-term impact.

Figure: PR#4582 labelled as Documentation

Technical Debt

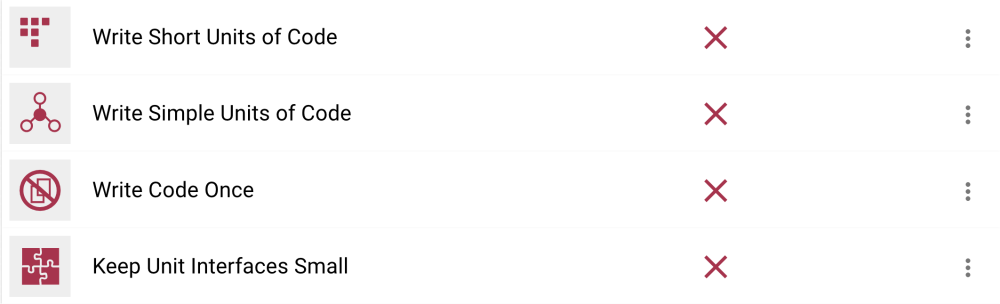

Technical debt refers to the long term “debt” incurred by writing less maintainable code. Thus, for many software projects, avoiding such a technical debt is very important9. In the case of NetworkX, insights into the technical debt can be gained from hotspot and code quality analysis. By utilising Better Code Hub on the NetworkX project, we can quickly see various factors that relate negatively to code quality.

Figure: Failed quality evaluations

Among the code quality deficiencies, is the issue of not “writing code once”, however, many of these issues are a result of duplicated comments.

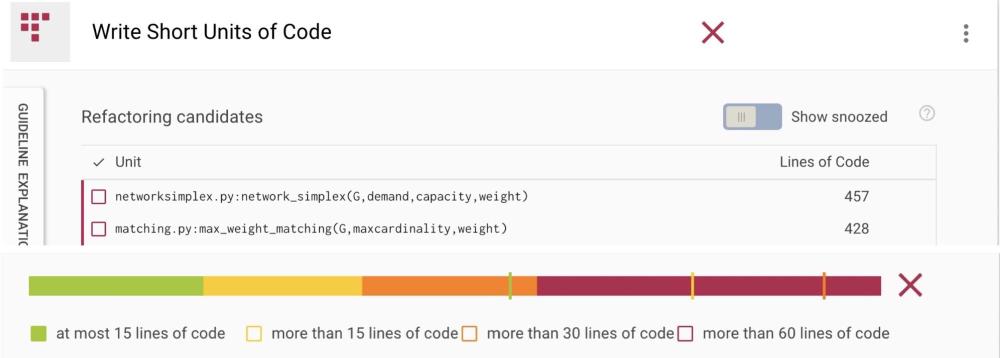

Another deficiency within the package is not writing short units of code. This is mainly due to developers not grouping related parameters of a graph into objects, which is a common practice for graph methods.

In general, the issue of writing long units of code is because it negatively affects maintainability. For example, if an algorithm that has been written in a long unit of code needs to be updated in the future, it can be hard for developers to decipher the logical flow.

Figure: Distribution of short units of code

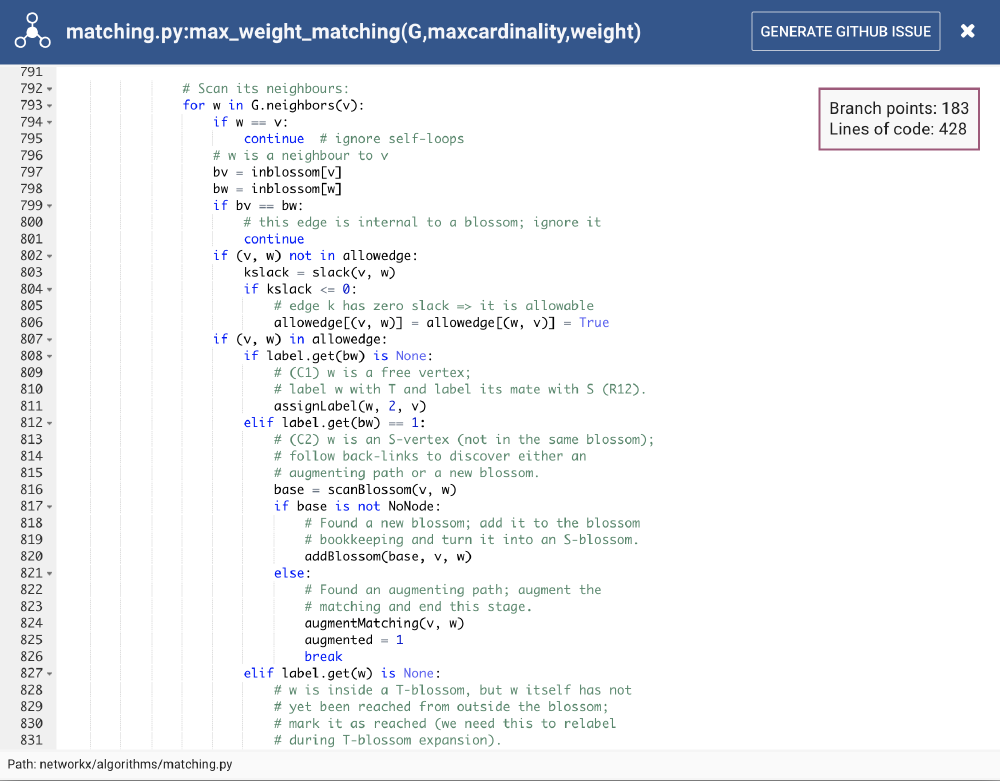

On closer inspection, BCH detects the matching.py file as an example that contains long units of code. In this file, the whole logical structure could be influenced by even the smallest changes. Furthermore, the file was marked as a hotspot, meaning that developers should be especially cautious with respect to code quality in these files, as they are often adapted.

Figure:

Code quality “write simple units of code” - Complex level of branch points in matching.py

Conclusion

We have thus seen the process undertaken by NetworkX for Software Quality Assurance. We have also analysed the code, using third-party tools, to understand other issues related to the quality.

References

-

CodeScene, The Bumpy Road Code Smell: Measuring Code Complexity by its Shape and Distribution ↩︎

-

Santiago Vidal, Iñaki berra, Santiago Zulliani, Claudia Marcos, and J. Andrés Díaz Pace. 2018. Assessing the Refactoring of Brain Methods. ACM Trans. Softw. Eng. Methodol. 27, 1, Article 2 (June 2018), 43 pages. DOI:https://doi.org/10.1145/3191314] ↩︎