OBS - Architectural Decisions

In our last essay, we discussed the product vision of OBS Studio, a software application for live streaming and recording. This essay focuses on the underlying architecture of OBS. The OBS community refactored the code in 2016 to support multiple platforms, and the refactored code can be viewed in the OBS Studio GitHub repository. The objectives of the new architecture are to make it multi-platform, to separate the application from the core, to make it easier to extend, and to simplify complex systems [1]. In this essay, we analyze OBS Studio from several architectural views.

Main design trend

During the refactoring of OBS Studio, developers first extracted the framework and main API into libobs and defined the other functions as plug-ins, so the main design trend in OBS Studio is the plug-in architecture. Almost all functionality is added through plug-in modules, which are typically dynamic libraries or scripts. Capturing and/or outputting video and audio, making a recording, outputting to an RTMP stream, and encoding in x264 are all examples of things accomplished via plug-in modules, which reside in the plugins directory of the repository. Those plug-ins work against the libobs library. There are four libobs object types that you can write additional plug-ins for:

- Sources: used to render video and/or audio on stream, for example capturing displays, games, or audio, playing a video, showing an image, or playing audio. Sources can also be used to implement audio and video filters.

- Outputs: provide the ability to output the audio/video that is currently being rendered. Streaming and recording are two common examples of outputs, but not the only types. Outputs can receive either raw data or encoded data.

- Encoders: OBS-specific implementations of video/audio encoders, which are used with outputs that use encoders. x264, NVENC, and Quicksync are examples of encoder implementations.

- Services: custom implementations of streaming services, which are used with outputs that stream. For example, you could have one custom implementation for streaming to Twitch and another for YouTube, allowing users to log in and use the platform's APIs to do things such as get the RTMP servers or control the channel [2].

These base objects of libobs are the backbone of OBS Studio. The use of plug-ins allows for modularity so that custom additions can be made. It also provides extensibility and scalability, making it a very solid code base for the future.
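To make the plug-in idea concrete, the following is a minimal sketch of a core that only stores registrations and a plug-in that registers one source type. It is not the libobs API: `Core`, `register`, `create`, `ImageSource`, and `load_image_plugin` are hypothetical names chosen for illustration, and only the four object kinds come from the list above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# The four plug-in object kinds named in the text.
OBJECT_KINDS = ("source", "output", "encoder", "service")


@dataclass
class Core:
    """Hypothetical stand-in for the core library: it only stores registrations."""
    registry: Dict[str, Dict[str, Callable[[], object]]] = field(
        default_factory=lambda: {kind: {} for kind in OBJECT_KINDS}
    )

    def register(self, kind: str, type_id: str, factory: Callable[[], object]) -> None:
        # Reject anything that is not one of the four known object kinds.
        if kind not in self.registry:
            raise ValueError(f"unknown object kind: {kind}")
        self.registry[kind][type_id] = factory

    def create(self, kind: str, type_id: str) -> object:
        # The core instantiates objects by id without knowing the plug-in code.
        return self.registry[kind][type_id]()


class ImageSource:
    """Hypothetical source type provided by a plug-in."""

    def render(self) -> str:
        return "image frame"


def load_image_plugin(core: Core) -> None:
    """A plug-in entry point: it tells the core what it provides."""
    core.register("source", "image_source", ImageSource)


core = Core()
load_image_plugin(core)
source = core.create("source", "image_source")
print(source.render())
```

The point of the design is that the core never imports `ImageSource` directly. New functionality arrives only through registration, which is what keeps the application and the core separated.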

Containers view

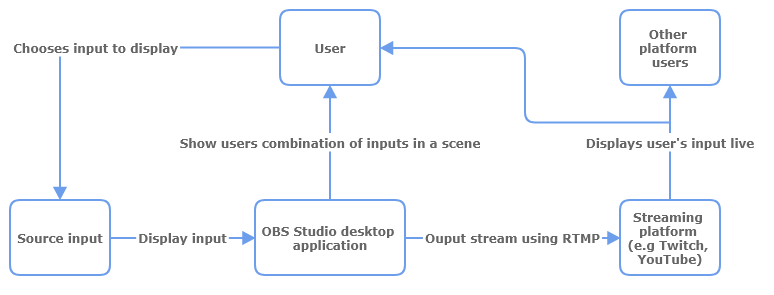

OBS Studio is an offline, client-side desktop application: the source inputs that users provide are transformed into a scene and displayed back to them. Users can also live stream this scene to a platform. In that case the application connects to a server using RTMP, and through that platform the scene is displayed back to the user and possibly to other viewers. Figure 1 shows a low-level containers view of OBS Studio.

Figure 1: Low-level container diagram for OBS Studio

Components view

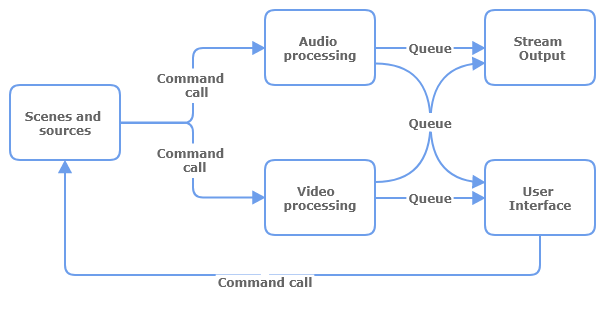

As a software application for video processing and live streaming, the main components of OBS Studio can be divided into the user interface, video/audio sources, video processing, audio processing, and stream services. Figure 2 shows the main components of OBS Studio and their inter-dependencies.

Figure 2: Component and connector diagram for OBS Studio

The user interface is the frontend of OBS. The largest area of the interface is taken up by the display/preview panes that show the video. All other functions, such as the scene and source settings, are also exposed through the user interface.

Sources and scenes are where users set up the stream layout and add webcams or any other devices or media that they want in the output. Users can apply customized filters to any video/audio device or to a single scene.

The video processing pipeline runs on two threads: a graphics rendering thread that draws the preview displays and the final mix, and a thread for video encoding and output. Because the main capability of OBS Studio is video/audio processing and output, graphics rendering is an important component. All video from sources and customized filters needs to be rendered, so OBS Studio wraps Direct3D and OpenGL behind its own graphics rendering API [3].

For live streaming, the processed video and audio are passed to the streaming module. Stream output services are the components that implement live streaming output for a specific streaming protocol, such as RTMP.

Connectors view

As shown in figure 2, the main connectors among the components are function calls and queues. In OBS, capabilities are implemented as functions, so the most common connector is the function call: components interact by calling each other's functions. Video is processed frame by frame, so the connector between video processing and output is a queue of raw frames. If an output takes both video and audio, it places the encoded packets in an interleave queue so that they are sent in monotonic timestamp order [3].
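The interleaving idea can be illustrated with a small sketch. It assumes each stream is already ordered by timestamp and merges the two with Python's `heapq.merge`. This is an illustration of the ordering guarantee only, not the way OBS implements its interleave queue; the `Packet` type and its fields are hypothetical.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Packet(NamedTuple):
    timestamp_ms: int  # hypothetical field: presentation time in milliseconds
    kind: str          # "video" or "audio"


def interleave(video: Iterable[Packet], audio: Iterable[Packet]) -> Iterator[Packet]:
    """Merge two streams, each already in timestamp order, into one ordered stream."""
    return heapq.merge(video, audio, key=lambda packet: packet.timestamp_ms)


# Example data: roughly 30 fps video and audio packets at a different cadence.
video = [Packet(0, "video"), Packet(33, "video"), Packet(66, "video")]
audio = [Packet(0, "audio"), Packet(21, "audio"), Packet(42, "audio"), Packet(64, "audio")]

merged = list(interleave(video, audio))
timestamps = [packet.timestamp_ms for packet in merged]
print(timestamps)
```

Whatever the arrival pattern of the two encoders, the consumer of the merged stream never sees a timestamp go backwards, which is the property the output needs.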

Development view

Source code structure

The code of OBS Studio has six main parts [4]:

- libobs: the core code of the project. It defines the project framework and core API and is mainly written in C.

- UI: the interface code, which uses the C++ Qt framework to provide an interface for the three major platforms (Windows, macOS, Linux).

- plugins: the plug-in code, which can be compiled independently into a DLL (Windows) or a .so file (UNIX). Sources (such as the screen recording input source), outputs, services (the various streaming services), and so on are all defined as plug-ins.

- libobs-d3d11: a graphics subsystem based on Direct3D, mainly used on Windows.

- libobs-opengl: a graphics subsystem based on OpenGL, mainly used on Unix systems.

- libobs-winrt: the code for screen capture on Windows.
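The platform split described in this list can be summarized in a few lines. The sketch below only encodes what the list states (DLL versus .so, Direct3D on Windows versus OpenGL elsewhere). The function names are hypothetical, `obs-x264` is used merely as an example module name, and real packaging on each platform may differ.

```python
def plugin_file_name(module: str, platform: str) -> str:
    """Return the shared-library file name for a plug-in module (assumed naming)."""
    if platform == "windows":
        return f"{module}.dll"
    return f"{module}.so"


def graphics_module(platform: str) -> str:
    """Pick the graphics subsystem described in the list for a platform."""
    return "libobs-d3d11" if platform == "windows" else "libobs-opengl"


for platform in ("windows", "linux"):
    print(platform, plugin_file_name("obs-x264", platform), graphics_module(platform))
```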

Module structure

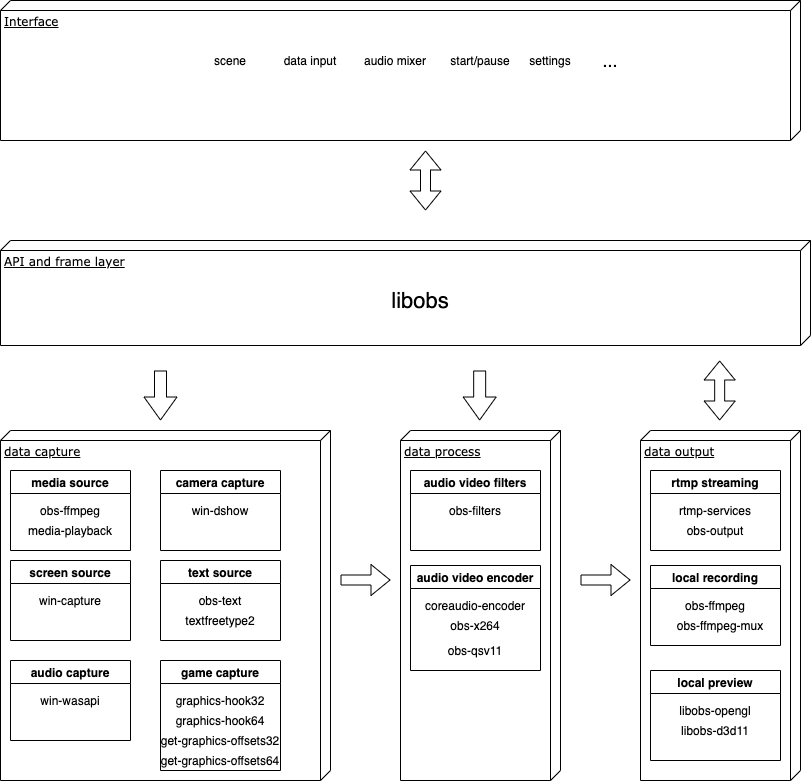

Across the whole system, the interface is built on Qt and interacts with the underlying API modules through the core module. The API modules can be roughly divided into three parts: a data capture layer, a data processing layer, and a data output layer. After sorting out the modules and functions, we summarize them as follows:

- Data capture layer: mainly corresponds to the scenes and the various sources in OBS Studio, which can be controlled through the interface.

- Data processing layer: mainly performs encoding and special effects processing on the data to be output.

- Data output layer: mainly corresponds to push streaming, local recording and playback recording, local rendering for preview, and so on.

The audio and video sources are captured by the corresponding module and processed by filtering and encoding. The processed result is then either sent back to the interface for preview or sent to the streaming service over RTMP.

Figure 3: OBS Studio module structure
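The three layers can be sketched as a simple pipeline. This is a deliberately simplified model: frames are plain dictionaries, every name is hypothetical, and filtering and encoding are reduced to setting flags, so it shows only the direction of data flow between the layers.

```python
from typing import Callable, Dict, List

Frame = Dict[str, object]  # hypothetical frame representation


def capture_layer(count: int) -> List[Frame]:
    """Data capture layer: a source produces raw frames."""
    return [{"index": i, "filtered": False, "encoded": False} for i in range(count)]


def processing_layer(frame: Frame) -> Frame:
    """Data processing layer: apply effects, then encode (both reduced to flags)."""
    processed = dict(frame)  # copy so the raw frame stays untouched
    processed["filtered"] = True
    processed["encoded"] = True
    return processed


def output_layer(frames: List[Frame], outputs: List[Callable[[Frame], None]]) -> None:
    """Data output layer: hand every processed frame to every active output."""
    for frame in frames:
        for output in outputs:
            output(frame)


preview: List[Frame] = []  # stands in for the local preview
stream: List[Frame] = []   # stands in for the RTMP stream

raw_frames = capture_layer(3)
processed_frames = [processing_layer(frame) for frame in raw_frames]
output_layer(processed_frames, [preview.append, stream.append])
print(len(preview), len(stream))
```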

Run time view

As discussed in the first essay, the main purpose of OBS Studio is video recording and streaming. In this part, we take the streaming scenario on Windows as an example to analyze the interaction between different modules.

In the main thread, the main function initializes OBS Studio through `OBSApp.AppInit()`, after which the main window is created. After the main window has been initialized, four operations follow: loading the modules, resetting audio, resetting video, and starting video rendering.

- During module loading, `obs_load_all_modules()` is called to iterate through each plugin. To load a plugin, the function `LoadLibrary()` is used on Windows, and `obs_module_load()` initializes the plugin's parameters.

- Three threads separately handle audio, video, and video rendering [5]. For audio and video, the thread processes the data frame by frame and encodes it with `libfdk_encode()` and `obs_x264_encode()`. The encoded data is then placed in a data queue by `add_packet()`. If the user starts the streaming service, `send_thread()` takes packets from that queue and sends them over RTMP. For video rendering, `obs_video_thread()` uses `tick_source()` to iterate over every frame source from the video queue and sends the rendered source to another queue, where it can be used by the corresponding plugins.
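The hand-off between the encoding side and the sending side is a classic producer/consumer arrangement. The sketch below models it with Python's standard `queue` and `threading` modules. The worker names, the `STOP` sentinel, and the string packets are assumptions for illustration; real encoding and the RTMP network send are replaced by placeholders.

```python
import queue
import threading
from typing import List

packets: "queue.Queue[object]" = queue.Queue()  # stands in for the packet queue
sent: List[object] = []                         # records what the sender "transmitted"
STOP = object()                                 # sentinel: encoding has finished


def encoder_worker(frame_count: int) -> None:
    """Producer: encode each frame and add the packet to the queue."""
    for index in range(frame_count):
        encoded = f"packet-{index}"  # placeholder for real encoder output
        packets.put(encoded)
    packets.put(STOP)


def sender_worker() -> None:
    """Consumer: take packets from the queue and send them in order."""
    while True:
        packet = packets.get()       # blocks until a packet is available
        if packet is STOP:
            break
        sent.append(packet)          # placeholder for a network send


encoder = threading.Thread(target=encoder_worker, args=(5,))
sender = threading.Thread(target=sender_worker)
sender.start()
encoder.start()
encoder.join()
sender.join()
print(sent)
```

Because the queue is the only shared state, the encoder never waits on the network and the sender never touches encoder internals, which mirrors the decoupling described above.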

Key quality attributes

In this section we look at how the architects realized the key quality attributes. We start with the main attribute that OBS Studio focuses on, which is maintainability. Thanks to the modular architecture, it is easy to modify the functionality of one aspect independently without affecting multiple libraries.

Furthermore, OBS is a self-contained package that every user runs individually, so scalability and reliability are not a concern: there are no servers that users depend on or that need to scale as the number of users increases.

Security is still a consideration, but OBS Studio only becomes a threat in two cases. An attacker could add malicious code to OBS Studio itself, which is highly unlikely since it is an open-source project. Alternatively, an attacker could gain local access to your OBS Studio installation, at which point they already have access to your PC and do not need OBS Studio to steal your information.

Rate of delivery and testability are also important, and they go hand in hand. Most issues are small bugs found by the community while using OBS Studio, so most merge requests are bug fixes, small enhancements, or code clean-up. The latter two again contribute to maintainability.

API design principles applied

The OBS Studio project has an extensive API, which lets you do programmatically anything you could do by using the application interactively. It is also easy to use, since there is extensive documentation [3] that describes the data structures as well as all the available methods. The documentation is split into categories in case you are only interested in interacting with a certain part of OBS Studio. The API has two parts: the C/C++ API, which has access to everything, and a smaller API for writing scripts in either Python or Lua [6].