Stellar - Quality and Evolution

Figure: Stellar Logo

Software quality is an essential factor in the success of a software project. It affects a system’s reliability and, therefore, its users’ trust, which is especially important for systems that hold virtual currency, such as Stellar. In this essay, we look at the processes Stellar has in place to ensure a high standard of software quality. We go into the technical details of its CI setup and testing system and highlight the most frequently changed and most impactful components. Finally, we assess the technical debt 1 of the Stellar codebases and look at the culture around software quality to evaluate whether Stellar’s software quality is high enough to entrust our money to it.

Software quality processes

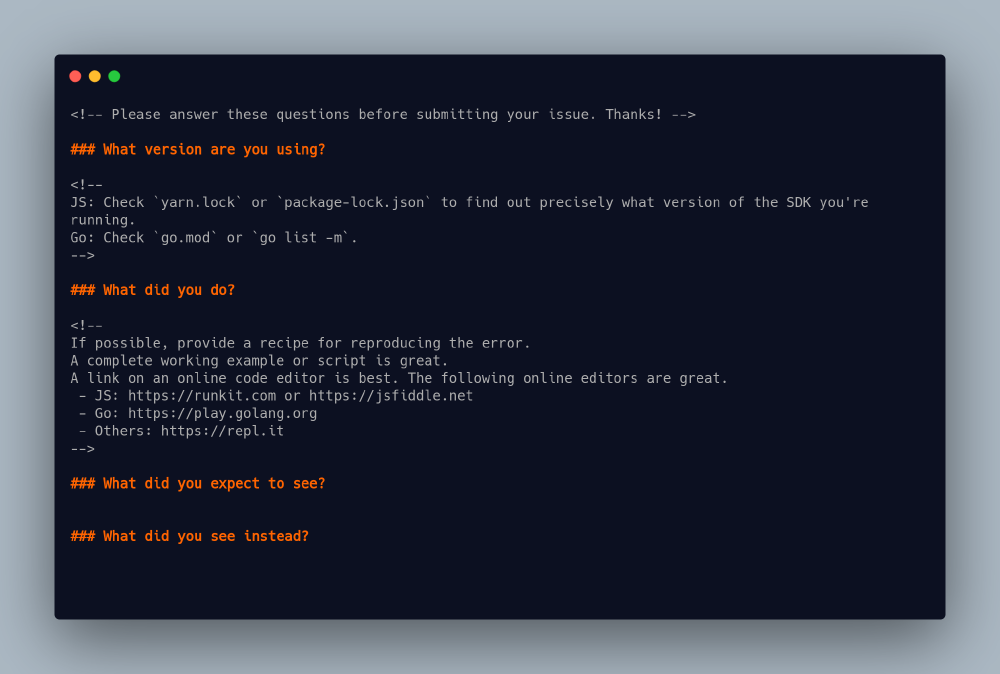

Software quality starts with the developers who write the software. Since Stellar is a large open-source project, many different people work on the Stellar software, so it is vital to have suitable protocols in place to ensure a consistent standard. Stellar has such protocols in the form of a contribution guide and a code of conduct. Stellar tries to ensure that these processes are followed by using GitHub issue and PR templates 2 and by upholding a strong quality culture, as discussed in the ‘Quality Culture’ section of this essay.

Figure: Example of the GitHub issue template for Horizon

Continuous Integration

Stellar’s codebases receive many changes every day 3 4, so reviewers are likely to miss small mistakes during code review from time to time. To catch as many of these mistakes as possible, Continuous Integration (CI) runs a range of checks on every change to the codebase. These include static analysis checks, such as linters and type checkers, and dynamic analysis checks, which run tests directly against the codebase. Stellar uses CircleCI 5 for Horizon and TravisCI 6 for Stellar Core.

The following checks are performed automatically by the CI as it is currently set up for Stellar’s codebases:

- Check if all formatting conforms to the coding standards, using linters

- Check if all dependencies are up-to-date

- Check if all deprecated fields are removed from the codebase

- Check if all generated code is updated

- Check if the build for Core succeeds

- Run the unit tests on Core and Horizon

- Run the integration tests on Horizon
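Conceptually, a CI run executes every configured check against a change and marks the build as failed if any of them fails. The Python sketch below illustrates only that control flow; the `Check` type, the check names, and the regenerate-and-compare helper are hypothetical and do not reflect the actual CircleCI or TravisCI configuration Stellar uses.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Check:
    """A single hypothetical CI check: a name plus a function returning pass/fail."""
    name: str
    run: Callable[[], bool]


def run_pipeline(checks: List[Check]) -> Tuple[bool, List[str]]:
    """Run every check (without stopping early) and report which ones failed."""
    failures = [check.name for check in checks if not check.run()]
    return len(failures) == 0, failures


def generated_code_is_current(committed: str, regenerated: str) -> bool:
    """Hypothetical 'generated code is updated' check: regenerating must change nothing."""
    return committed == regenerated


if __name__ == "__main__":
    checks = [
        Check("lint", lambda: True),
        Check("generated-code", lambda: generated_code_is_current("v1", "v2")),
        Check("unit-tests", lambda: True),
    ]
    ok, failed = run_pipeline(checks)
    print("build passed" if ok else f"build failed: {failed}")
```

Running all checks instead of stopping at the first failure lets a contributor see every problem from a single CI run.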

Testing and Coverage

As in every software project, testing is an important aspect of Stellar. Before adding new code, contributors should test it to verify its correctness 9. Testing gives an indication of the system’s reliability and can signal potential flaws in new code changes. Stellar uses testing in various ways: to verify the correctness of newly added code, to search for breaks or leaks in the system, and to verify that existing code keeps operating correctly. The kinds of tests currently in use for Stellar are unit tests, integration tests, fuzzing, and stress tests.

Unit tests test individual functions in isolation. In addition, Stellar uses integration tests, which check the correctness of multiple functions interacting with each other. These include tests that add an account and tests in which multiple transactions happen simultaneously, to check for concurrency issues. In the Go repository, which includes the Horizon service, total code coverage is 49%, which is relatively low. The aim was to increase test coverage for specific packages within the repository 10; however, coverage has gone down over the years.
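To make the distinction concrete, the Python sketch below contrasts a unit test, which exercises a single function, with an integration-style test that drives several operations concurrently and checks a system-wide property. `ToyLedger` and its methods are hypothetical stand-ins, not Horizon or Stellar Core APIs.

```python
import threading
import unittest
from typing import Dict


class ToyLedger:
    """Hypothetical in-memory ledger used only to illustrate the two kinds of tests."""

    def __init__(self) -> None:
        self._balances: Dict[str, int] = {}
        self._lock = threading.Lock()

    def create_account(self, account_id: str, starting_balance: int) -> None:
        if starting_balance < 0:
            raise ValueError("starting balance must be non-negative")
        with self._lock:
            if account_id in self._balances:
                raise ValueError(f"account {account_id} already exists")
            self._balances[account_id] = starting_balance

    def pay(self, source: str, dest: str, amount: int) -> bool:
        """Move `amount` from source to dest; return False if the payment is invalid."""
        with self._lock:
            if amount <= 0 or dest not in self._balances or self._balances.get(source, 0) < amount:
                return False
            self._balances[source] -= amount
            self._balances[dest] += amount
            return True

    def balance(self, account_id: str) -> int:
        with self._lock:
            return self._balances[account_id]

    def total(self) -> int:
        with self._lock:
            return sum(self._balances.values())


class UnitTests(unittest.TestCase):
    """Unit test: one function, one behaviour."""

    def test_create_account_rejects_duplicates(self) -> None:
        ledger = ToyLedger()
        ledger.create_account("A", 100)
        with self.assertRaises(ValueError):
            ledger.create_account("A", 50)


class IntegrationTests(unittest.TestCase):
    """Integration-style test: several operations interacting, run concurrently."""

    def test_concurrent_payments_conserve_total(self) -> None:
        ledger = ToyLedger()
        ledger.create_account("A", 1000)
        ledger.create_account("B", 1000)

        def send_many(src: str, dst: str) -> None:
            for _ in range(500):
                ledger.pay(src, dst, 1)

        threads = [
            threading.Thread(target=send_many, args=("A", "B")),
            threading.Thread(target=send_many, args=("B", "A")),
        ]
        for thread in threads:
            thread.start()
        for thread in threads:
            thread.join()
        self.assertEqual(ledger.total(), 2000)
```

Saved as a module, the tests run with `python -m unittest <module_name>`. The concurrent test is meant to catch missing synchronisation, although, like most race-condition tests, it cannot guarantee that a race will show up on every run.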

Stellar Core’s tests also employ fuzzing. A fuzzer generates many inputs (in Stellar’s case, transactions) and tries to find weaknesses in the system that the developers had not considered. With fuzzing, the Stellar team tries to prevent malicious transactions from harming the system. There is currently an ongoing debate about adding fuzzing to Horizon 11. Before this can be implemented, however, some security aspects need to be considered. One of these is the visibility of fuzzing results: because they expose potential vulnerabilities, the results should probably be visible only to the Horizon development team.
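The idea behind fuzzing can be illustrated with a deliberately simple random fuzzer in Python. Everything in it is hypothetical: the toy account model, the `(source, destination, amount)` transaction shape, and `buggy_pay`, which forgets to reject negative amounts so that the fuzzer has something to find. Production fuzzers are usually coverage-guided and mutate inputs rather than sampling them uniformly at random, so this sketches the concept rather than Stellar Core’s fuzzing setup.

```python
import random
from typing import Callable, Dict, Optional, Tuple

Tx = Tuple[str, str, int]  # hypothetical toy transaction: (source, destination, amount)
ApplyFn = Callable[[Dict[str, int], Tx], None]


def safe_pay(balances: Dict[str, int], tx: Tx) -> None:
    """Apply a payment, silently rejecting any invalid transaction."""
    src, dst, amount = tx
    if amount <= 0 or src not in balances or dst not in balances or balances[src] < amount:
        return
    balances[src] -= amount
    balances[dst] += amount


def buggy_pay(balances: Dict[str, int], tx: Tx) -> None:
    """Same as safe_pay, but with a planted bug: negative amounts are accepted."""
    src, dst, amount = tx
    if src not in balances or dst not in balances or balances[src] < amount:
        return
    balances[src] -= amount
    balances[dst] += amount


def fuzz(apply: ApplyFn, iterations: int = 10_000, seed: int = 0) -> Optional[Tx]:
    """Feed random transactions to `apply`; return the first one that breaks an invariant."""
    rng = random.Random(seed)  # fixed seed so any failure is reproducible
    accounts = ["A", "B", "C"]
    candidates = accounts + ["unknown"]  # also generate transactions for missing accounts
    balances = {account: 100 for account in accounts}
    expected_total = sum(balances.values())
    for _ in range(iterations):
        tx = (rng.choice(candidates), rng.choice(candidates), rng.randint(-1000, 1000))
        apply(balances, tx)
        # Invariants: no account goes negative and no money is created or destroyed.
        if min(balances.values()) < 0 or sum(balances.values()) != expected_total:
            return tx
    return None
```

Here `fuzz(safe_pay)` finds nothing, while `fuzz(buggy_pay)` returns a transaction with a negative amount that drives an account below zero, exactly the kind of input a developer might not have thought to test by hand.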

The last form of testing that Stellar incorporates is stress testing. Stress tests simulate many transactions, which allows the system’s performance to be evaluated under different loads. These tests are not meant to check code quality but rather to explore the limits of the system. Therefore, stress testing is not part of the development pipeline but a separate functionality that Stellar Core instances can employ.
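A stress test can be sketched as running the same kind of workload at increasing load levels and recording the observed throughput. The Python sketch below does this for a hypothetical toy payment workload; it measures wall-clock time with `time.perf_counter` and does not reproduce Stellar Core’s own load-generation functionality.

```python
import time
from typing import Dict, List


def apply_payments(balances: Dict[str, int], n_tx: int) -> None:
    """Hypothetical workload: n_tx one-unit payments bouncing between two accounts."""
    for i in range(n_tx):
        src, dst = ("A", "B") if i % 2 == 0 else ("B", "A")
        if balances[src] >= 1:
            balances[src] -= 1
            balances[dst] += 1


def stress_test(loads: List[int]) -> Dict[int, float]:
    """Return the observed transactions per second for each load level."""
    results: Dict[int, float] = {}
    for n_tx in loads:
        balances = {"A": 10, "B": 10}  # fresh state for every load level
        start = time.perf_counter()
        apply_payments(balances, n_tx)
        elapsed = time.perf_counter() - start
        results[n_tx] = n_tx / elapsed if elapsed > 0 else float("inf")
    return results


if __name__ == "__main__":
    for load, tps in stress_test([1_000, 10_000, 100_000]).items():
        print(f"{load:>7} tx: {tps:,.0f} tx/s")
```

In a real system, the interesting result is the load at which throughput stops growing or latency starts to climb; this toy workload has no such limit, so it only shows the shape of the measurement.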

Hotspot Components

To get an idea of the activity in the Stellar codebase, we performed a hotspot analysis on the Core repository. Because this repository contains a large number of files, we perform the analysis at the directory (component) level. Note that this information is based on the state of the Stellar Core master branch at the time of writing.

For Stellar Core, we gathered the number of commits for each component directory; these commits represent changes to the source code. The total number of changes and the number of changes over the last year indicate which components have seen the most activity, both overall and recently. The results are shown in the table below, sorted in descending order of the ratio of last-year commits to total commits for each directory.

| Directory | Total commits | Commits in last year | Last year / total |
|---|---|---|---|
| invariant | 142 | 52 | 36.6% |
| test | 381 | 125 | 32.8% |
| transactions | 859 | 220 | 25.6% |
| work | 76 | 17 | 22.4% |
| catchup | 153 | 34 | 22.2% |
| herder | 859 | 180 | 21.0% |
| historywork | 110 | 23 | 20.9% |
| util | 433 | 76 | 17.6% |
| process | 106 | 16 | 15.1% |
| main | 1039 | 138 | 13.3% |
| crypto | 142 | 18 | 12.7% |
| ledger | 878 | 109 | 12.4% |
| bucket | 259 | 28 | 10.8% |
| overlay | 768 | 80 | 10.4% |
| database | 229 | 23 | 10.0% |
| history | 619 | 57 | 9.2% |
| scp | 299 | 22 | 7.4% |
| xdr | 237 | 17 | 7.2% |
| simulation | 307 | 17 | 5.5% |
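Counts like these can be gathered with plain `git` commands. The Python sketch below assumes a local clone of stellar-core whose component directories live under `src/`, and uses `git rev-list --count` with an optional `--since` filter; the helper names are our own. Because `--since="1 year ago"` is relative to the current date, rerunning it will not reproduce the exact snapshot in the table.

```python
import subprocess
from typing import Dict, List, Tuple


def count_commits(repo: str, path: str, since: str = "") -> int:
    """Count commits reachable from HEAD that touch `path`, optionally only after `since`."""
    cmd = ["git", "-C", repo, "rev-list", "--count"]
    if since:
        cmd.append(f"--since={since}")
    cmd += ["HEAD", "--", path]
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return int(result.stdout.strip())


def gather(repo: str, directories: List[str]) -> Dict[str, Tuple[int, int]]:
    """Map each directory (assumed to live under src/) to (total, last-year) commit counts."""
    return {
        d: (count_commits(repo, f"src/{d}"), count_commits(repo, f"src/{d}", since="1 year ago"))
        for d in directories
    }


def hotspot_rows(counts: Dict[str, Tuple[int, int]]) -> List[Tuple[str, int, int, float]]:
    """Build table rows sorted by the share of last-year commits, highest first."""
    rows = [
        (directory, total, recent, 100.0 * recent / total)
        for directory, (total, recent) in counts.items()
        if total > 0
    ]
    return sorted(rows, key=lambda row: row[3], reverse=True)
```

For example, `hotspot_rows(gather("stellar-core", ["invariant", "test"]))` would produce rows in the same format as the table, given a clone in `./stellar-core`. Passing an absolute date such as `since="2020-03-20"` instead would make a run repeatable.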

In a previous post, we described how one of the pillars of Stellar’s further development is supporting its robustness and usability. Looking at the entries with a high share of recent changes, we can see that the community has responded to this robustness goal with a lot of activity. The invariant directory, which has the highest share, contains much of the functionality used to validate the system’s properties over time (think of the conservation of the total number of Lumens in the system, for example). The test directory, which, as you may expect, contains tests, has the second-highest share of recent changes. The activity within these directories can therefore be seen as the community trying to improve robustness through both property/state validation and testing, both of which are sensible places to focus on for that goal.
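A conservation invariant of the kind mentioned above can be expressed as a check that runs after each ledger close and compares the total amount of lumens before and after. The Python sketch below is only in that spirit: `LedgerSnapshot` and `check_conservation` are hypothetical, amounts are in stroops (1 XLM = 10,000,000 stroops), and the model simply treats the total as account balances plus a fee pool, ignoring any other mechanism that might legitimately change it.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class LedgerSnapshot:
    """Hypothetical, simplified ledger state with amounts in stroops."""
    balances: Dict[str, int]
    fee_pool: int

    def total_lumens(self) -> int:
        # Fees leave accounts but stay in the system, so they count towards the total.
        return sum(self.balances.values()) + self.fee_pool


def check_conservation(before: LedgerSnapshot, after: LedgerSnapshot) -> Optional[str]:
    """Return an error message if lumens were created or destroyed, otherwise None."""
    delta = after.total_lumens() - before.total_lumens()
    if delta != 0:
        return f"conservation violated: total changed by {delta} stroops"
    return None
```

A payment of 100 stroops from A to B with a 100-stroop fee moves value between balances and the fee pool without changing the total, so the check passes; any state change that alters the total is reported.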

Another directory with both a relatively large share of recent changes and many changes overall is the ‘transactions’ directory. In Stellar Core, transactions are the mechanism for mutating the ledger, and there are many kinds of transactions, each with a different purpose. Stellar has been undergoing refactoring, and many of these refactorings require updating how transactions work and interact with other components. This activity can therefore also be seen as the community improving code quality, which is another reasonable way to improve the robustness of the system.
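In Stellar, a transaction bundles one or more operations and is applied atomically: if any operation fails, none of its effects are kept. The Python sketch below illustrates that all-or-nothing mutation on a toy balance map; the `payment` helper and the exception-based failure signalling are hypothetical simplifications, not Stellar Core’s transaction code.

```python
from typing import Callable, Dict, List

Balances = Dict[str, int]
Operation = Callable[[Balances], None]  # raises ValueError when the operation is invalid


def payment(src: str, dst: str, amount: int) -> Operation:
    """Build a hypothetical payment operation."""
    def apply(balances: Balances) -> None:
        if amount <= 0 or dst not in balances or balances.get(src, 0) < amount:
            raise ValueError(f"invalid payment {src}->{dst} of {amount}")
        balances[src] -= amount
        balances[dst] += amount
    return apply


def apply_transaction(balances: Balances, operations: List[Operation]) -> bool:
    """Apply all operations, or none of them if any one fails."""
    scratch = dict(balances)  # work on a copy so a failure leaves the real state untouched
    try:
        for operation in operations:
            operation(scratch)
    except ValueError:
        return False
    balances.clear()
    balances.update(scratch)  # commit every change at once
    return True
```

Copying the state and committing at the end is the simplest way to get this behaviour; a production implementation would more likely track changes in a nested, rollback-capable layer than copy the whole ledger.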

Over the entire history of the Stellar project, Core has seen the most changes in main, ledger, herder, transactions, overlay, and history, in that order (herder and transactions are tied). This is to be expected, as these are the system’s central parts: apart from the components mentioned in the previous post, main handles booting as well as loading configurations and tests. It therefore makes sense that these directories have received a significant amount of attention over the project’s history.

As for future hotspots, we can only go by this recent activity and the issues currently open and assigned. Based on these, we expect that most activity in the short to mid term will not focus on a particular component but on further improving the product’s stability, as in the past year. In particular, much of the activity seems to revolve around adopting fuzzing in the testing approach, further refactoring, and other code quality and test improvements.

Technical Debt

Technical debt refers to the extra development cost of adding a feature caused by choosing a straightforward short-term approach rather than a better long-term approach 1. For example, this debt can be caused by confusing or highly interdependent code structures, which make testing harder. Technical debt can be examined with static analysis tools or manually by a programmer; issues 3196, 3111, and 3447 are examples in which contributors discuss the technical debt of the system.

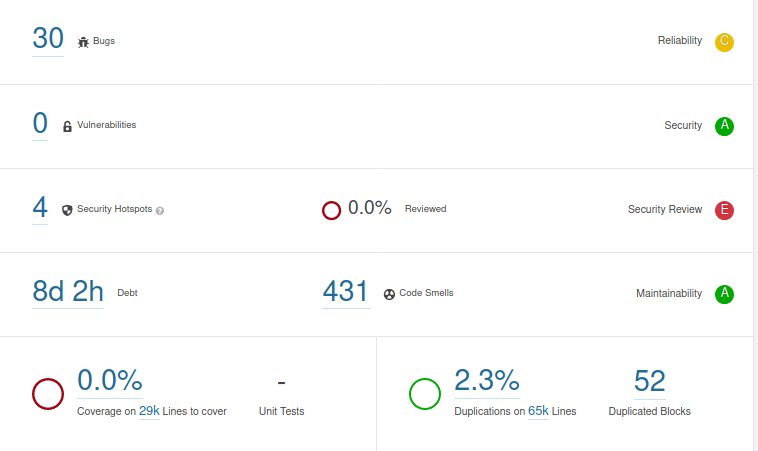

We used SonarQube 12 to assess the technical debt of the system. We analysed the Stellar-Go repository 13, which contains all the public Go code of the Stellar Development Foundation. According to SonarQube, it would take 8 days and 2 hours to resolve all technical debt issues. This corresponds to a debt ratio of 0.2%, which gives the repository an A rating in SonarQube. When test code is included in the analysis, the technical debt increases to 23 days.
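SonarQube derives the debt ratio by dividing the estimated remediation effort by an estimated development cost, which by default is 30 minutes per line of code, and maps that ratio to a letter rating. The Python sketch below reproduces this arithmetic assuming SonarQube’s default settings (8-hour days, 30 minutes per line, and rating thresholds of 5%, 10%, 20%, and 50%); the function names are our own.

```python
MINUTES_PER_DAY = 8 * 60   # assumed default: SonarQube reports effort in 8-hour days
COST_PER_LINE_MIN = 30     # assumed default development cost of one line of code

# Upper bound of the debt ratio for each rating; anything above the last bound is "E".
RATING_GRID = [(0.05, "A"), (0.10, "B"), (0.20, "C"), (0.50, "D")]


def to_minutes(days: int = 0, hours: int = 0, minutes: int = 0) -> int:
    """Convert a SonarQube-style effort estimate to minutes."""
    return days * MINUTES_PER_DAY + hours * 60 + minutes


def debt_ratio(remediation_minutes: int, lines_of_code: int,
               cost_per_line: int = COST_PER_LINE_MIN) -> float:
    """Remediation effort divided by the estimated cost of writing the code."""
    return remediation_minutes / (lines_of_code * cost_per_line)


def maintainability_rating(ratio: float) -> str:
    """Map a debt ratio to a letter rating using the grid above."""
    for threshold, rating in RATING_GRID:
        if ratio <= threshold:
            return rating
    return "E"
```

With 8-hour days, the reported 8 days and 2 hours is `to_minutes(days=8, hours=2)`, or 3,960 minutes of remediation effort. Any ratio up to 5% yields an A, so the reported 0.2% sits well inside that band.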

The ‘bindata.go’ file causes a big part of this technical debt: 1 day and 4 hours. This file converts the database migration files into Go source code; because of this unique task, it is an essential file that cannot be refactored. It also contains 32% of the code smells, so we would argue that the actual technical debt of the Stellar-Go repository is even lower than the SonarQube analysis suggests.

Figure: SonarQube analysis of the Stellar-Go repository excluding the test files

Quality Culture

A good quality culture is essential for obtaining a high-quality codebase. Since Stellar is divided into multiple repositories, we distinguish between the quality culture of the project as a whole and that of the individual repositories.

The quality culture of the project as a whole is very thorough: big changes need to be submitted as a proposal first. These proposals are divided into two groups, the Core Advancement Proposals (CAPs) 14 and the Stellar Ecosystem Proposals (SEPs) 15. Every proposal is reviewed in detail in the draft stage and is then either accepted or rejected by the SEP Team or the CAP Core Team. Proposals also specify which parts of the system, and which repositories, the changes apply to. Pull requests related to proposals follow a specific naming protocol as well; for example, pull requests 2419 and 2893 belong to different repositories but both relate to CAP 35.

Since the repositories have different purposes and responsibilities within the Stellar project, their quality cultures also differ. Contributions to Stellar Core need to follow specific guidelines: a specific code style is enforced, and whenever a performance enhancement is submitted, the programmer needs to provide evidence of the improvement as described in the performance evaluation document. Pull request 2034 is a great example of how thorough the review process for a performance-related pull request is.
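As an illustration of the kind of evidence a performance claim needs, the Python sketch below compares repeated timings of a baseline and a candidate build using medians, which are less sensitive to outlier runs than means, plus a conservative no-overlap check. It is a hypothetical helper, not the procedure from Stellar Core’s performance evaluation document, and the numbers in it are illustrative only.

```python
from statistics import median
from typing import Sequence


def relative_improvement(baseline_ms: Sequence[float], candidate_ms: Sequence[float]) -> float:
    """Fractional reduction in median runtime; positive means the candidate is faster."""
    if not baseline_ms or not candidate_ms:
        raise ValueError("need at least one timing per build")
    base = median(baseline_ms)
    cand = median(candidate_ms)
    return (base - cand) / base


def is_clear_improvement(baseline_ms: Sequence[float], candidate_ms: Sequence[float]) -> bool:
    """Conservative check: even the slowest candidate run beats the fastest baseline run."""
    return max(candidate_ms) < min(baseline_ms)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements of Stellar Core.
    baseline = [12.1, 11.8, 12.4, 11.9, 12.0]
    candidate = [10.2, 10.5, 10.1, 10.4, 10.3]
    print(f"median improvement: {relative_improvement(baseline, candidate):.1%}")
    print("clear improvement:", is_clear_improvement(baseline, candidate))
```

Reporting both a central estimate and whether the run distributions overlap makes it harder for measurement noise to pass as a real speed-up.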

The issue and pull request statistics show an active and reliable group of contributors. Last month, Stellar Core had a total of 27 merged pull requests and 14 closed and opened issues. The Stellar Go repository has a similar group of contributors and automatically generates Go Report Cards 16, which make it easy for contributors to review and enforce good coding practices. Pull request 3435, issue 1343, and issue 2055 are great examples of the code, review, and test practices in the Stellar Go repository.

References

1. Technical Debt explained, Referenced 17 Mar, https://en.wikipedia.org/wiki/Technical_debt
2. GitHub Templates, Referenced 17 Mar, https://docs.github.com/en/github/building-a-strong-community/configuring-issue-templates-for-your-repository
3. GitHub commit history for Core, Referenced 20 Mar, https://github.com/stellar/stellar-core/commits/master
4. GitHub commit history for Horizon, Referenced 20 Mar, https://github.com/stellar/go/commits/master
5. CircleCI, Referenced 17 Mar, https://circleci.com/
6. TravisCI, Referenced 17 Mar, https://www.travis-ci.com/
7. CI Config File, Referenced 17 Mar, https://github.com/stellar/go/blob/master/.circleci/config.yml
8. CI Build for Core, Referenced 17 Mar, https://github.com/stellar/stellar-core/blob/master/ci-build.sh
9. Stellar contributing guide, Referenced 18 Mar, https://github.com/stellar/docs/blob/master/CONTRIBUTING.md
10. GitHub issue "Add unit and race condition tests for ingest components", Referenced 22 Mar, https://github.com/stellar/go/issues/1343
11. GitHub issue "Evaluate Gitlab CI Fuzz testing", Referenced 20 Mar, https://github.com/stellar/go/issues/3395
12. SonarQube, Referenced 21 Mar, https://www.sonarqube.org
13. Stellar Go repository, Referenced 21 Mar, https://github.com/stellar/go
14. Stellar Core Advancement Proposals, Referenced 22 Mar, https://github.com/stellar/stellar-protocol/blob/master/core/README.md
15. Stellar Ecosystem Proposals, Referenced 22 Mar, https://github.com/stellar/stellar-protocol/blob/master/ecosystem/README.md
16. Stellar Go Report Card, Referenced 22 Mar, https://goreportcard.com/report/github.com/stellar/go