Jitsi is a fast-growing software package, which requires a flexible development culture. During the Covid-19 pandemic in particular, demand for Jitsi Meet and similar web conferencing software exploded, which forced the core team to innovate rapidly and to focus on scalability. Apart from these recent developments, the Jitsi community has an established workflow: the core team decides the direction of the project and takes the lead in implementing core features, while the community addresses and implements less crucial features. Throughout, the core team tries to safeguard the overall quality of the project.

Software quality processes

To uphold a high code standard throughout the project, Jitsi Meet uses several quality processes. These can be split into automated and manual quality processes, both of which are described in further detail in this section.

Automated quality processes

For automated quality processes, Jitsi Meet has two main systems in place:

- The first process is linting. Linting analyzes the code for potential bugs and code style issues. It is in place for the React front-end of Jitsi Meet and helps to maintain the standard of the code.

- The second process is continuous integration (CI), which is executed each time a pull request is submitted to the repository. The CI consists of multiple stages. The first stage builds the front-end and checks for linting issues that may have arisen during development. The second stage is only executed when needed and runs the unit tests that are present for the back-end. If any stage fails, because the front-end does not build, the linter reports errors, or a unit test fails, the CI run fails. The code then cannot be merged into the master branch and therefore cannot be included in the main product. The programmer who submitted the pull request has to fix the issues and submit the code again.

One final automated quality process is Jitsi Meet Torture [1], which runs peak signal-to-noise ratio (PSNR) tests [2] to assess the quality of the video and audio within Jitsi Meet. This module is also used to run all the available tests for both the web version and the mobile version of the product.
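To make the peak signal-to-noise ratio metric concrete, the sketch below computes it from its standard definition, 10 · log10(MAX² / MSE), for two equally sized sequences of sample values. It is an illustration only and not the code used by Jitsi Meet Torture; the function name `psnr` and the default peak value of 255 (8-bit samples) are assumptions made for this example.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Return the peak signal-to-noise ratio in dB between two sample sequences.

    Hypothetical helper for illustration; max_value=255 assumes 8-bit samples.
    """
    if len(reference) != len(distorted) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    # Mean squared error between the reference and the distorted signal.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)

    if mse == 0:
        # Identical signals: the ratio is unbounded.
        return math.inf

    return 10 * math.log10(max_value ** 2 / mse)


# A distorted frame that is off by one level at every pixel.
print(round(psnr([100, 100, 100, 100], [101, 99, 101, 99]), 2))
```

A higher value means the received signal is closer to the reference, so a test could fail a run when the value drops below a chosen threshold.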

Manual quality processes

Jitsi Meet uses the conventional methods of manual quality assurance. The first is code review, which is nowadays standard practice on almost every larger-scale project.

Another process is manual testing, which can be done in multiple ways. Jitsi uses a beta test server [3], which runs the latest development version and is used by developers to test their newly added features and to check that everything still functions as it should. Unfortunately, this process sometimes results in commits being reverted after they turn out to have introduced bugs.

Continuous integration processes

As stated previously, Jitsi Meet has implemented a basic continuous integration process. This process is set up in GitHub [4] and checks whether the PR can be merged without issues.

First, the job is set up by checking out the right repository and downloading the correct and most recent dependencies with npm install. After this, npm run lint [5] is executed to detect code errors. If no issues occur, the build process is executed. For back-end code, unit tests are run to make sure the code does not introduce new errors. In addition, stress tests are run. After the code has been reviewed, and corrected if necessary, it can be merged into the main branch.
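The fail-fast behaviour of such a pipeline can be sketched as follows. This is a simplified model, not the project's actual CI configuration: the `Stage` class, the function names, and the simulated stage outcomes are all hypothetical, and in a real job each stage would run a command such as npm install or npm run lint and inspect its exit code.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Stage:
    name: str                # label shown in the CI report
    run: Callable[[], bool]  # returns True when the stage succeeds


def first_failure(stages: List[Stage]) -> Optional[str]:
    """Run the stages in order and stop at the first one that fails."""
    for stage in stages:
        if not stage.run():
            return stage.name
    return None


def can_merge(stages: List[Stage]) -> bool:
    """A pull request is mergeable only if no stage failed."""
    return first_failure(stages) is None


# Hypothetical pipeline with simulated outcomes (assumption for illustration).
pipeline = [
    Stage("install dependencies", lambda: True),
    Stage("lint", lambda: False),  # simulate a linter error
    Stage("build", lambda: True),
    Stage("back-end unit tests", lambda: True),
]

print(first_failure(pipeline))  # prints: lint
print(can_merge(pipeline))      # prints: False
```

Because the loop returns at the first failing stage, the build and the unit tests are never started once the linter reports an error, which mirrors the order of the steps described above.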

Test processes

An automatic testing pipeline is not built into the Jitsi Meet repository, so code coverage is not taken into account. The repository contains only one JUnit test file, in which only an IPv6-to-IPv4 class for Android is tested. Instead, the repository contains a testing mode with a testing toolkit that lets testers simulate other users and print additional output, which is necessary for manual testing. The repository also contains Markdown files that explain how to test certain features, e.g. virtual backgrounds.

Most of the manual testing is performed during code reviews. However, there are also testing releases, which can be freely downloaded and deployed or used on the beta test server [3]. This server serves as an environment for finding bugs in the beta version of the program, so that they can be fixed before the code reaches the stable version. Furthermore, the stable version of Jitsi Meet is used by many community members, who find bugs and report them on the GitHub repository.

There is, however, an automatic test suite that can be run against Jitsi Meet instances. It lives in a separate repository called Jitsi Meet Torture and contains 462 unit tests that cover several Jitsi Meet features, such as muting, peer-to-peer video calling, using the chat, and other key features. The tests can be run for iOS, Android and web, which are all the platforms that Jitsi Meet can be used on. The suite is only used in some cases, in combination with continuous integration, by a Jenkins instance running Maven tests. The test results can be viewed on an automatically generated web page.

Example results from an automatic test run on a pull request can be found here [6].

The automated testing prevents many regression issues. However, to identify likely future problem areas, we perform a hotspot analysis.

Hotspot components

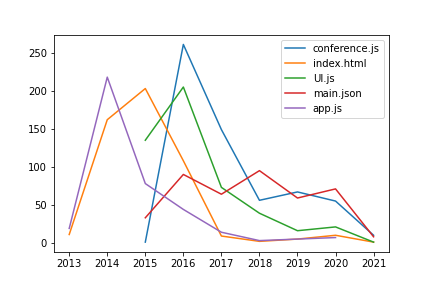

Jitsi Meet currently has a history of 8338 commits, 1242 of which touch the npm package files package.json and package-lock.json, which are inevitably updated whenever a dependency is added. Apart from these two, the top five hotspot files are:

- jitsi-meet/conference.js, with 535 commits.

- jitsi-meet/index.html, with 494 commits.

- jitsi-meet/modules/UI/UI.js, with 454 commits.

- jitsi-meet/lang/main.json, with 395 commits.

- jitsi-meet/app.js, with 367 commits.

Figure: Plot of commits per year for the top 5 hotspots
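Commit counts per file like the ones listed above can be derived from the git history. The sketch below is one possible way to do so and is not necessarily the method used for this analysis: it counts the file paths printed by `git log --name-only --pretty=format:`, in which every touched file appears once per commit. The function names and the default ignore list are assumptions made for this example.

```python
import subprocess
from collections import Counter


def read_git_log(repo_path, since=None):
    """Return the file names touched by each commit, one path per line.

    Hypothetical helper; pass e.g. since="1 year ago" to limit the period.
    """
    cmd = ["git", "-C", repo_path, "log", "--name-only", "--pretty=format:"]
    if since is not None:
        cmd.append(f"--since={since}")
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def top_hotspots(log_text, n=5, ignore=("package.json", "package-lock.json")):
    """Return the n most frequently committed files, skipping ignored paths."""
    counts = Counter(
        line.strip() for line in log_text.splitlines() if line.strip()
    )
    for path in ignore:
        counts.pop(path, None)
    return counts.most_common(n)


# Small hand-written log: three commits separated by blank lines.
sample_log = (
    "conference.js\npackage.json\n\n"
    "conference.js\nindex.html\n\n"
    "conference.js\nindex.html\napp.js\n"
)
print(top_hotspots(sample_log, n=2))
```

Calling `top_hotspots(read_git_log("path/to/jitsi-meet"))` on a local clone would produce a ranking for the full history, and passing a `since` value restricts it to a recent period.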

The file conference.js contains the high-level logic for the React client. Its commit history shows mostly atomic fixes and feature additions.

The file index.html is the root web page for the client. It changed most between 2015 and 2016, because before 2016 all React components were included in this file; in 2016 [7] these components were refactored into separate files, including conference.js.

The file main.json is the main English language file and contains the translations for all strings. New strings are added to it in small batches, whereas all other translation files are updated in larger batches and therefore have shorter commit histories.

The file app.js contains the React logic for the main app window. Most of its commits are from before 2015, when the majority of the window code was contained in this file. In 2015 the bulk of this code was moved to conference.js, so app.js now only contains the window initialization, which is why it has not been changed since 2020.

Since many of these files are no longer hotspots due to architectural changes, we should also analyze the hotspots of the past year:

- jitsi-meet/config.js, with 88 commits.

- jitsi-meet/lang/main.json, with 69 commits.

- jitsi-meet/conference.js, with 54 commits.

- jitsi-meet/react/features/base/config/configWhitelist.js, with 41 commits.

- jitsi-meet/lang/main-de.json, with 40 commits.

Again, the language files and conference.js are changed often, along with two config files. For the config file, an implementation is usually made in a different file and a commented-out line is added to the config file. This gives users some freedom in their own deployment, but the code is not used in the main deployment of Jitsi.

Code quality

To analyze code quality automatically, ESLint is enabled and configured with the settings recommended by ESLint [8], which strictly supervise code quality. Every commit has to pass the linter in the CI pipeline, so accepted commits necessarily follow the style guide. Inline comments that disable rules are used sparingly to circumvent the linter. An example is config.js, where many options are kept as commented-out code that the linter would otherwise reject.

Even though this is the explicit strategy adopted by the community, it still feels like a workaround. A better approach would be to add the options to the config file without using them. The refactoring mentioned in the hotspot analysis is a great example of the team reducing file size: the index.html file became too cumbersome, so its elements were extracted, and the same holds for the app.js file. In this way, the hotspot analysis supports the team’s view on the matter.

Quality culture

While some code quality can be maintained with a linter, it will not find all code smells or all code that carries technical debt. Therefore, the Jitsi community enforces code reviews for each pull request (PR) that is submitted to the repository. Within these PRs, we can observe the general quality culture, which is described in this section. We base our findings on 10 PRs and their related issues.

The consensus we gathered from the PRs we reviewed is that PRs submitted by paid Jitsi staff were mostly reviewed and merged much more quickly than PRs submitted by community members. This makes sense, since Jitsi employees work to a schedule and aim to finish their issues as soon as they can, while there is less time pressure for other PRs to be reviewed or merged.

- Numbers in the room name add too many spaces to the Tab Title: The related issue was submitted in April 2020, when a user noticed that his room name PartnerC4DT was changed to PartnerC 4 DT in the tab title. Two weeks later the bug was confirmed by a project volunteer. After this, no response came in for 6 months, until January 2021, when a contributor submitted a first commit. After some technical discussion between the paid Jitsi team and the contributor, changed code was pushed. At the time of writing, this code has not been merged.

- Normalize language format: In December 2020 a user noticed that the language is not detected correctly on iOS devices. Two months later a contributor found the cause of the bug. After a review by the paid Jitsi team, the change was merged without comments.

- Please bump lodash: The Lighthouse plugin (developer plugin) detected an outdated lodash version with a security vulnerability. The ticket was assigned by a paid Jitsi team member and merged the same day.

- Fix refactor preloading to avoid CORS issues: This PR fixes an issue reported by a community member. A new release introduced a bug in the Referrer header, which caused profile images not to load. A paid team member found a workaround and implemented it two weeks later; after review it was merged the same day.

- Make German translation gender-neutral: A German user noticed that Jitsi is not gender-neutral in the German language. After the proposal was approved, he changed the language file himself, and once the change was approved it was merged the same week.

- Add virtual background flow: In this PR, we can see that the reviewer focuses strongly on functionality as well as consistency. This is important in a large project like Jitsi Meet.

- disable blur button if camera is off: In a simple PR like this, the reviewer points the programmer to an existing part of the code that can be used to simplify the new code. This keeps technical debt away and teaches others about the possibilities within the code.

- Redesign web toolbar: In this PR, multiple reviewers point out different issues to the programmer. This is good, as it shows that people with different expertise look at a PR to cover the different scenarios that might break the program.

- Tflite blur effect: Even though the purpose of this PR is to optimize the blur effect used in Jitsi, the reviewer still comments on related parts of the code, which helps with clean-up of the code in general. This is not overdone, which is good, since it can be a pitfall to keep focusing on improvements rather than merging the issue at hand.

- Expose participants info array: In this PR, the reviewer states their main concern and helps the programmer to look at the most important issues that may arise. This way of helping works well for reviewing and for maintaining code standards.

Technical debt

Technical debt consists of choices that are made to improve the program in the short term while deferring possible problems to the long term. In the case of Jitsi Meet, there seems to be a limited amount of debt.

Building and deploying the project takes only two commands, so build debt is almost absent. Furthermore, the project uses extensive code linting and strict code reviews, so code debt is also not a large issue.

Jitsi Meet could be argued to be suffering from test debt and test automation debt. However, it was consciously decided to not include automatic testing and continuous integration into the main repository. Therefore, it can’t be seen as debt.

The documentation of the Jitsi Meet project is currently hosted as a wiki on GitHub. It is called the Jitsi Meet Handbook and contains getting-started information for developers, for users, and for self-hosting. However, the documentation is still missing some essential components that have been promised. One of these is an architecture graph [9] denoting the structure and context of Jitsi Meet. This can be seen as an example of documentation debt.

References

1. Jitsi torture - The Jitsi Torture Test repository.

2. Peak Signal-to-Noise Ratio as an Image Quality Metric - ni.com