In the previous blog post, we discussed the architecture of Kubernetes and the different views on it. This blogpost will focus on the quality and the (recent) evolution of Kubernetes. We will try to answer the questions including, but not limited to, What are some of the conventions used?; What is the role of test coverage?; Which components are the hotspots right now? How is the quality culture in general?; Does Kubernetes have technical debt? Please read along!

Quality Convention

To contribute to the Kubernetes project, all contributors are asked to follow the coding conventions of Kubernetes1. These conventions are a collection of guidelines, style suggestions, and tips for writing code in the different programming languages used throughout the projects and cover the following topics:

- Code conventions

- Testing conventions

- Directory and file conventions

Code Conventions

The code conventions are about the two most used programming languages used in the Kubernetes project. The first is bash and the conventions are following the Google shell styleguide2, also the developer has to make sure that the code can build, release, test, and run cluster-management scripts on macOS. On the Go language there exists several conventions spanning subjects such as APIs, comments in code, code review comments, naming and many more. These conventions are in place to assure that the code quality is identical in all files throughout the project.

Testing Conventions

The testing conventions are in place to make sure that all newly developed code is properly tested according to the size of the added functionality. I.e. when a developer creates a significant feature, they should come with matching integration and/or e2e(end-to-end) tests. Kubernetes has a Special Interest Group (SIG) solely dedicated to testing where all the details and conventions are mentioned3.

Directory and File Conventions

Directory and file conventions exist to make sure that the project is clarifying and uniform. Examples of these types of conventions are:

- Filenames should be lowercase.

- Go source files & directories use underscores, not dashes.

- Document directories & filenames should use dashes rather than underscores.

Continuous Integration (CI)

Automation tools are in place to streamline the process of contributing as well as aiding the developers by relieving them from the repetitive, simple work4. Kubernetes uses Prow (which was developed by Kubernetes) for CI5. The automation of CI consists of two stages: Tide and PR builder.

Tide

Tide is a component of Prow that manages a pool of GitHub PRs that match a given set of criteria6. Tide automatically retests PRs that meet the criteria in a pool and merge them when they have passed the tests.

PR Builder

The PR builder is a robotic process that attempts to run the tests for each PR made by a developer. If the developer is an unknown user, the PR bot(@k8s-ci-robot) asks a trusted developer from Kubernetes to sign off on the PR to check if it is safe. If the trusted developer replies with /ok-to-test the PR builder will begin CI testing. The trusted developers are mentioned in each directory where a unit of independent code is found, in a document called OWNERS7 8. It mentions the current reviewers and the approvers of that directory.

Within this CI, it could be possible that certain tests fail and pass another time (flaky tests). In the PR the developer has an option to /retest if he or she believes such a flake has occurred. These flakes do have to be reported as separate issues to the project.

Test Processes and the Role of Test Coverage

Kubernetes' test processes can be divided into three main categories: unit testing, integration testing, and end-to-end testing.

End-to-end (e2e) testing means testing the workflow of a system from beginning to end9. According to a post on the Kubernetes Blog from the 22nd of March 201910, e2e-testing became a difficult task in Kubernetes since many of its components ended up being developed independently from Kubernetes. However, in Kubernetes 1.13 (the current version is 1.20.5) the e2e-framework was updated to include these external components. The main focus of the update was on choosing the correct dependencies and not including all the existing packages, hence making the process shorter and easier to manage. E2e-testing happens in three steps: creating the tests, building a test cluster, and running the tests on this cluster. The tests are written in Go and use Ginkgo11, a behaviour-driven development framework and Gomega12 for the assertion statements. Kubetest can be used as the testing cluster13.

Accordingly, the testing processes are very well established and have a clear and directed purpose. Moreover, the testing frameworks change together with the development of the source code to ensure the quality of the system. This can also be seen in the fact that Kubernetes offers developers the possibility to identify test coverage and see for themselves where vulnerabilities exist and where improvements can be made.

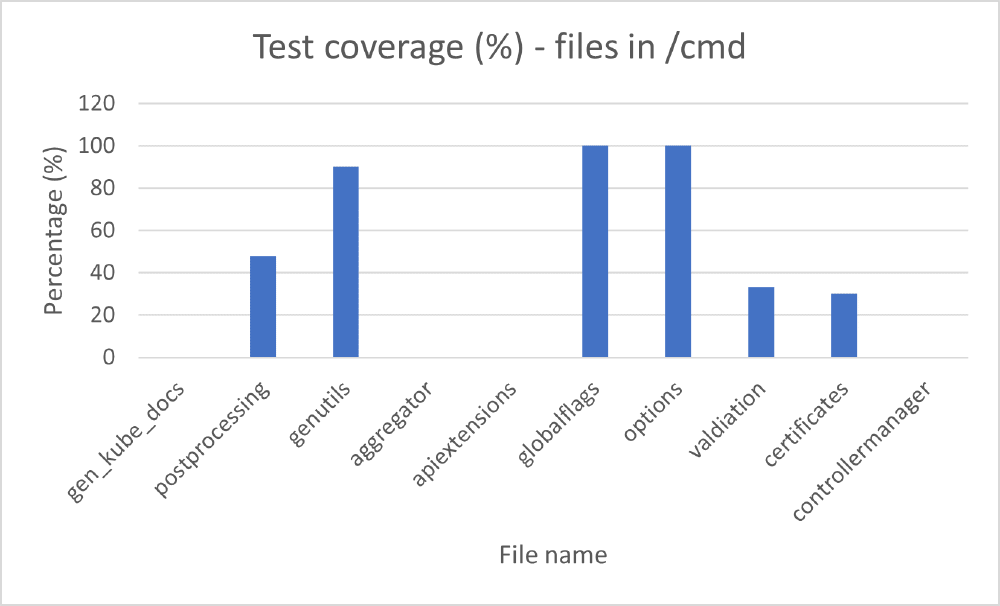

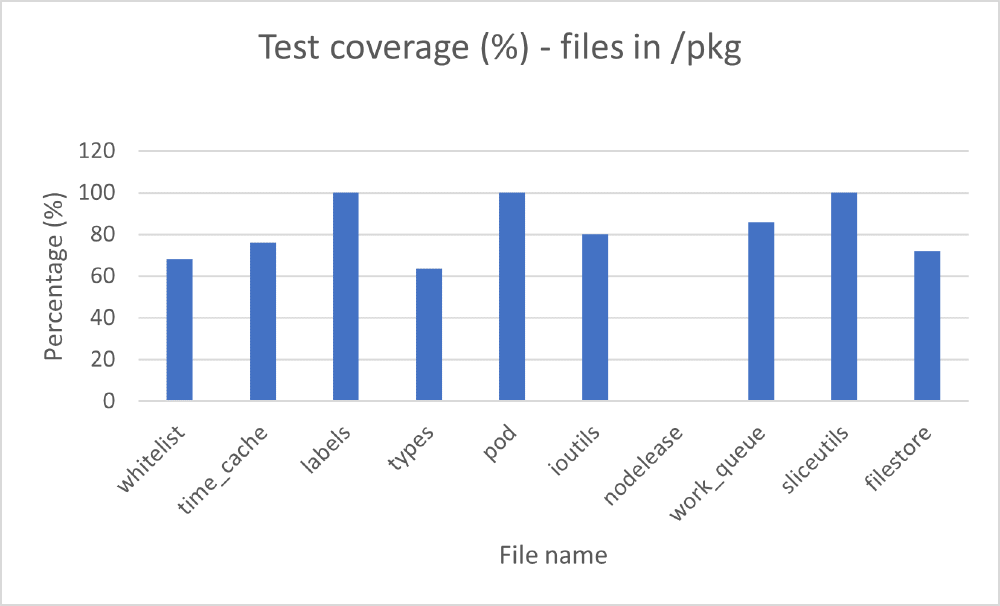

We have run the command provided by Kubernetes14 and obtained a coverage percentage for every file in the system. The lines of code that are not covered by tests appear in red and the ones that are covered appear in green. This makes it very easy to see where developers can contribute. The following graph presents a few files from two important folders of the system (cmd and pkg):

Figure:

Test coverage for part of cmd module

Figure:

Test coverage for part of pkg module

When analysing the code coverage, we see that most of the files have over 50% coverage, but there are also files with 0% coverage. However, small files with only a few short functions represent many of the files with no coverage.

Hotspot Components Through Time

From March 2018 up until the start of 2019, the main development activity was focused on testing, cluster federation, machine learning, high-performance computing workloads, and the ingress controller for load balancer from Amazon Web Services (AWS)15. We can see that the main hotspot components from 2014 up until 2018 were an orchestration tool to manage containers on several hosts called kubespray16, a container runtime for Kubernetes called CRI-O17, and AWS related components18. It is interesting to note that recently, near the end of 2020, Kubernetes deprecated Docker as a container runtime19. This is due to Docker not being designed to be embedded inside Kubernetes, which can cause problems. Therefore, we can expect that Kubernetes will focus its developments on other container runtimes, such as CRI-O17.

The previously described main hotspot components seem somewhat unrelated and are quite large projects on themselves. This is because the software is constantly updating and upgrading its components to eventually reach a level of maturity where it is employed in enterprise production IT environments15. The overwhelming complexity of the system has been mentioned by users20. This complexity leads to a lot of parallel development processes and extensions to the software, which makes it hard to distinguish hotspot components as a whole. It is easier to discern hotspot components at a subsystem level, such as storage, networking, and scaling. These are specified by the Kubernetes SIGs21.

Hotspot Components' Code Quality

It can be seen in the number of commits to the Kubernetes repositories that the main Kubernetes project has had a relative decline in the number of commits, while the testing infrastructure' number of commits has seen a relative increase. It is interesting to see that Kubernetes has seen an average number of languages used of around 25. A large software project like Kubernetes uses multiple languages and the community is actively managing what languages are used and what languages are dropped. This shows that the contributors care about using the right tool for the right job18.

However, there do seem to be some problems with the codebase as described in a security review from 201922. In this report, it is said that the codebase is large and complex, with large sections of code containing minimal documentation and numerous dependencies. This means that logic re-implementations could be cleaned up by centralizing it into libraries to reduce complexity, facilitate easier patching, and reducing the burden of documentation across disparate areas of the codebase.

The Quality Culture

Before it is even possible to merge a PR, it needs the following labels: /lgtm, /approved, and cncf-cla:yes. The following labels need to be absent: do-not-merge, do-not-merge/blocked-paths, do-not-merge/cherry-pick-not-approved, do-not-merge/contains-merge-commits, do-not-merge/hold do-not-merge/invalid-commit-message, do-not-merge/invalid-owners-file, do-not-merge/needs-kind, do-not-merge/needs-sig, do-not-merge/release-note-label-needed, do-not-merge/work-in-progress, and needs-rebase23. Most labels can only be set by selected members, which are described in the OWNERS file8.

Before a contributor makes a PR, they must run the commands make verify, make test and make test-integration24. This means that a lot of things have to be verified, all unit tests and integration tests must pass. As we can see from different PRs that got merged in the past25 26 27 28 29, the contributor says what the PR is about and sets certain labels if needed and possible. Then the CI bot ‘k8s-ci-robot’ adds labels to the PR it can detect automatically such as its size, whether the contributor has signed the cncf-cla, and that it needs an ‘ok’ from a certain Kubernetes member to start all the tests. Additionally, this (or another) member needs to also indicate that the PR is ready to be actively worked on (/triage-accepted)30.

Members also try to correct certain quality attributes, such as structure in files. The following quote is from a comment on a PR25: “I believe this content should be auto-generated based on structured comments like this above the tests and a run of hack/update-conformance-yaml.sh. There’s a bit more info in the conformance test readme.”. The contributor reacts with gratitude to this comment. This shows that it is normal to correct these quality details.

Every contributor is polite and tries to take the quality of every little thing to the highest standard possible. It is often the case that even when discussing a choice of words or a way of programming certain things, that both sides of the discussion are right, but that they try to come to a middle ground where both parties are happy and the quality of the code is still kept high. In most discussions, people reacting to a PR also tend to ask questions to start a discussion instead of saying that something is wrong25 26 27 28 29.

Technical Debt

To identify the technical debt of the system, we used Sonarqube31. Sonarqube is a static analysis tool that can identify bugs, system vulnerabilities, and gives an indication of technical debt. It covers 27 programming languages (including Go). The following information can be extracted from the analysis:

| Folder | Technical debt | Lines of code | Comment percentage |

|---|---|---|---|

| build | 0h | 62 | 35.4 |

| cluster | 5h 56min | 3,538 | 16.3 |

| cmd | 17d | 65,191 | 17.7 |

| hack | 6h 42min | 939 | 22.3 |

| pkg | 177d | 566,026 | 15.4 |

| plugin | 6d 1h | 25,404 | 12.8 |

| staging | 445d | 864,121 | 14.4 |

| test | 39d | 175,023 | 15 |

| third_party | 3h 45min | 2,313 | 15.1 |

(d = days; h = hours; min = minutes)

Moreover, we ran the analysis on the Kubernetes components from /cmd, identified in our previous blogpost Product vision and Problem Analysis:

| File | Technical debt | Lines of code | Comment percentage |

|---|---|---|---|

| kube-apiserver | 3h 7min | 2,092 | 17.6 |

| kube-controller-manager | 4h 27min | 4,190 | 20.7 |

| cloud-controller-manager | 15min | 226 | 32.7 |

| kube-proxy | 5h 59min | 2,524 | 13.6 |

| kube-scheduler | 5h 35min | 2,482 | 12.2 |

| kubelet | 5h 10min | 2,405 | 22.7 |

We observed that the comment percentages in the first table are under 50% in all of the folders and that the technical debt of the Kubernetes components in the second table is very low. The latter indicates that the components are well managed and have high quality.

A good strategy is incrementally minimising the technical debt32. The developer can focus on refactoring files that are frequently used or that implement important features. This method could work in Kubernetes as well because it is a complex codebase and developers should not waste time working on deprecated parts of code. Hence, technical debt should be avoided if possible and reduced if required.

References

-

https://github.com/kubernetes/community/blob/master/contributors/devel/sig-testing/testing.md ↩︎

-

https://github.com/kubernetes/community/blob/master/contributors/devel/automation.md ↩︎

-

https://github.com/kubernetes/test-infra/tree/master/prow ↩︎

-

https://github.com/kubernetes/test-infra/tree/master/prow/cmd/tide ↩︎

-

https://github.com/kubernetes/kubernetes/blob/master/cmd/OWNERS ↩︎

-

https://github.com/kubernetes/community/blob/master/contributors/guide/owners.md ↩︎

-

https://www.browserstack.com/guide/end-to-end-testing#:~:text=End%20to%20end%20testing%20(E2E,for%20integration%20and%20data%20integrity. ↩︎

-

https://kubernetes.io/blog/2019/03/22/kubernetes-end-to-end-testing-for-everyone/ ↩︎

-

https://github.com/kubernetes/test-infra/blob/master/kubetest/README.md ↩︎

-

https://github.com/kubernetes/community/blob/master/contributors/devel/sig-testing/testing.md#unit-test-coverage ↩︎

-

https://containerjournal.com/topics/container-ecosystems/code-analysis-finds-kubernetes-approaching-level-of-maturity/ ↩︎

-

https://www.redhat.com/sysadmin/kubespray-deploy-kubernetes ↩︎

-

https://medium.com/sourcedtech/an-analysis-of-the-kubernetes-codebase-4db20ea2e9b9 ↩︎

-

https://kubernetes.io/blog/2020/12/02/dont-panic-kubernetes-and-docker/ ↩︎

-

https://github.com/kubernetes/community/blob/master/sig-list.md ↩︎

-

https://github.com/kubernetes/community/blob/d538271/wg-security-audit/findings/Kubernetes%20Final%20Report.pdf ↩︎

-

https://github.com/kubernetes/community/blob/master/contributors/devel/development.md ↩︎

-

https://github.com/kubernetes/kubernetes/labels?page=6&sort=name-asc ↩︎