The previous two essays described the product vision and architecture of pip. This essay will discuss the code base and software quality processes of pip. pip utilises continuous integration, tests and thorough code review to keep the code quality up to standards. Continuous integration runs several checks to ensure that all tests pass and all code adheres to pip’s code style. Thorough code review pushes maintainers and contributors to write efficient solutions.

pip’s software quality process

pip relies on three main processes to keep its software quality high. The first, discussed in the next section, is its detailed continuous integration (CI) pipeline. The other two are thorough code review within pull requests (PRs) and documentation, although it is debatable whether the latter counts as a process.

Reviews are done by two types of reviewers. The first, unsurprisingly, is humans: each PR is read, judged and commented on by active code maintainers, and usually one or two people review and approve a PR before it is merged. The second type consists of bots and automated scripts. We consider these to be reviewers because they use the comment section of a PR to give automated feedback. Examples are the CI itself and BrownTruck [1], a bot that automatically flags a PR as unmergeable when it runs into one of a set of predefined errors.

Finally, pip has very detailed documentation [2], which lets both current and prospective maintainers easily look up what the code should do and why. Maintainers are also required to update the documentation in every PR. This keeps it up to date and, since pip is an open source project, ensures that nobody has to guess at the purpose of a piece of code.

Key elements of pip’s continuous integration processes

pip’s continuous integration is quite detailed for a command line program. When a contributor creates a PR, pip’s CI automatically performs 24 checks. These include two linting checks (for Ubuntu and Windows) and test runs (both unit and integration tests) for four Python versions on each of the three operating systems. The Windows runs are doubled because two architectures (x64 and x86) are covered. The remaining checks cover the validity of the documentation, quality checks, packaging and vendoring. Running all of these checks together takes at least a couple of hours.

pip distributes its test suite and checks over three platforms that provide free executors for open source projects: GitHub Actions [3], Azure DevOps CI [4] and Travis CI [5].

Linting and testing are very common, possibly even standard, in most larger repositories nowadays. pip stands out, however, in that it has “documentation CI”: not CI of the documentation itself, but a required file that documents each PR. Every PR, even one that changes only documentation and no code, requires the contributor to add a “news file”. These files are managed by towncrier [6], a utility that summarizes them. A PR’s news file describes what the PR changes in the documentation and/or code base. Its contents are comparable to a commit message: short and to the point, usually a single sentence. Each news file has a type, which tells both the reader and towncrier what the PR is about before they even read the contents. Examples include (but are not limited to): feature, removal, bugfix, doc, trivial, vendor and process.
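
To make the news-file convention more concrete, here is a minimal Python sketch that checks a news file name against the types listed above. It assumes that a news file is named `<identifier>.<type>.rst`, which is a common towncrier layout. The helper `parse_news_filename` and the example file names are hypothetical and are not part of pip or towncrier.

```python
from typing import NamedTuple

# News file types named in the text (that list is not exhaustive).
NEWS_TYPES = {"feature", "removal", "bugfix", "doc", "trivial", "vendor", "process"}


class NewsFragment(NamedTuple):
    identifier: str  # usually an issue or PR number
    kind: str        # one of NEWS_TYPES


def parse_news_filename(filename: str) -> NewsFragment:
    """Split an assumed '<identifier>.<type>.rst' name and validate its type."""
    parts = filename.split(".")
    if len(parts) != 3 or parts[2] != "rst":
        raise ValueError(f"expected '<identifier>.<type>.rst', got {filename!r}")
    identifier, kind, _ = parts
    if kind not in NEWS_TYPES:
        raise ValueError(f"unknown news type {kind!r} in {filename!r}")
    return NewsFragment(identifier, kind)
```

Under these assumptions, `parse_news_filename("1234.bugfix.rst")` yields the identifier `"1234"` and the type `"bugfix"`, while a name with an unknown type raises a `ValueError`.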

Test processes

pip’s tests are written using the pytest [7] test framework together with mock [8] and pretend [9]. pytest is used for every kind of test in pip: unit, integration and functional. mock is a library for mocking objects in tests. Mocking means creating objects that simulate the behaviour of real objects, so that a test does not have to worry about the behaviour of dependencies. pretend is used for stubbing: a stub is an object that returns pre-canned responses rather than doing any computation.
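
The difference between a stub and a mock can be illustrated with a small sketch. It uses only the standard library: `unittest.mock` for the mock, and `types.SimpleNamespace` as a stand-in for what a stubbing library would provide. The function `latest_version` and the `fetch_versions` method are hypothetical and do not come from pip’s code base.

```python
from types import SimpleNamespace
from unittest import mock


def latest_version(index, package):
    """Code under test: ask an index object for versions and pick the newest."""
    versions = index.fetch_versions(package)
    return max(versions)


# Stub: returns a pre-canned answer and records nothing about its use.
stub_index = SimpleNamespace(fetch_versions=lambda package: [(1, 0), (2, 1), (2, 0)])
stub_result = latest_version(stub_index, "example")

# Mock: also returns a canned answer, but additionally lets the test
# verify how the dependency was called.
mock_index = mock.Mock()
mock_index.fetch_versions.return_value = [(0, 9), (1, 0)]
mock_result = latest_version(mock_index, "example")
mock_index.fetch_versions.assert_called_once_with("example")
```

In both cases the real dependency (an index that would need network access) is replaced, so the test only exercises the logic of `latest_version`.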

tox [10] is used to automate the setup and execution of pip’s tests. pip’s documentation recommends running the tests in parallel, since running them sequentially can take a very long time.

As mentioned before, pip has both integration and unit tests for its code base. The CI does not check for a decrease in coverage, so test coverage of new code is not enforced automatically. The maintainers do, however, require new functions to be tested. At the time of writing, pip’s code base contains 2728 tests.

It is very simple to run the tests (unit, integration, functional) locally with the command:

$ tox -e py39 -- -n auto

py39 refers to Python version 3.9 and can be replaced with a different Python version. The argument -n auto means that the tests are run in parallel; remove it to run the tests sequentially.

To run the linters locally, use the following command:

$ tox -e lint

The linter checks whether the codebase still conforms to pip’s coding style and that all new code is being documented.

As said earlier, the CI contains automated tests that check that new changes do not break existing functionality (regression tests) and that new functionality behaves as expected. The automated tests and linters run on the following operating systems: macOS, Windows and Ubuntu (Linux).

Hotspot components from past and future

Hotspot components are components that have been involved in many code changes. This section covers some past and possible future hotspot components. Insight into future hotspots is useful because improving the quality of these components now makes them cheaper to refactor later.

To find hotspot components in the pip code base, we ran an analysis using CodeScene [11] and analysed the commit history using the following command:

git log --pretty=format: --name-only | sort | uniq -c | sort -rg | head -100
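
The counting step of the pipeline above can also be sketched in Python. The function below takes the text produced by `git log --pretty=format: --name-only` and returns the most frequently changed paths. The name `count_file_changes` is hypothetical, and unlike the shell pipeline this sketch skips the blank separator lines instead of counting them.

```python
from collections import Counter


def count_file_changes(git_log_output: str, top: int = 100) -> list[tuple[str, int]]:
    """Count how often each path appears in `git log --name-only` output."""
    paths = (line.strip() for line in git_log_output.splitlines())
    counts = Counter(path for path in paths if path)  # skip blank separator lines
    return counts.most_common(top)
```

The input text could, for example, be obtained with `subprocess.run(["git", "log", "--pretty=format:", "--name-only"], capture_output=True, text=True, check=True).stdout` when run inside a repository.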

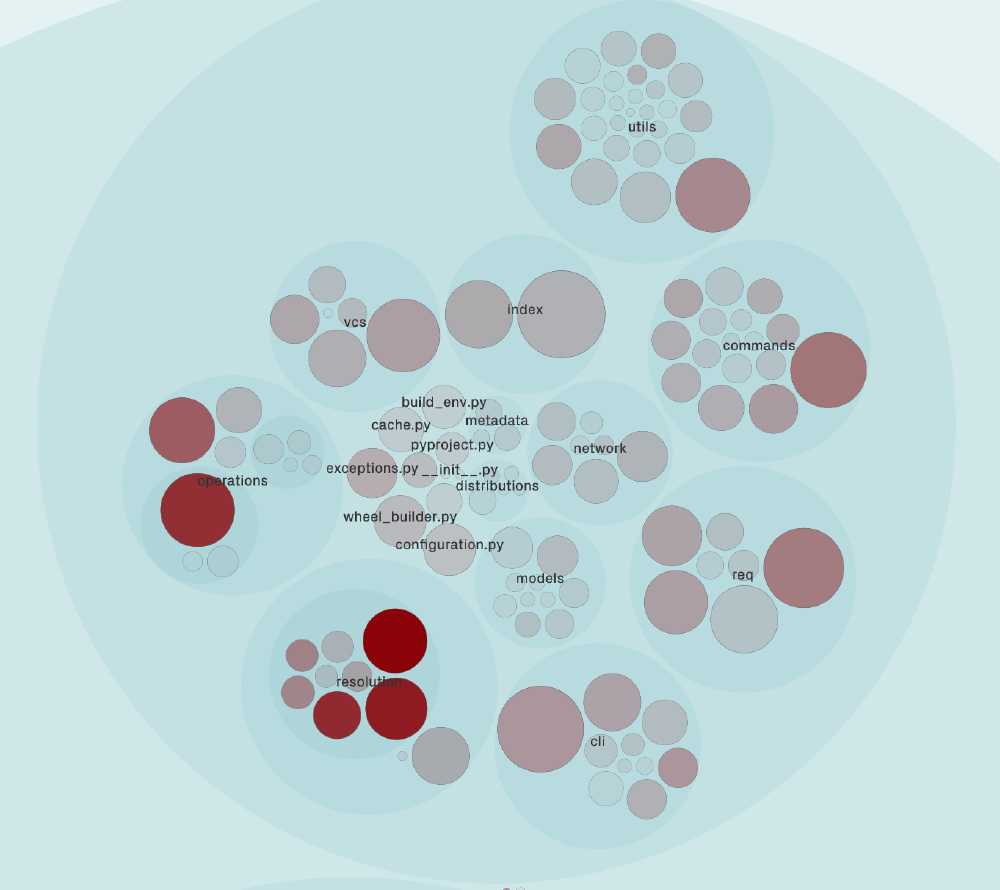

The figure and table below show what the hotspot components from pip are. Most of the hotspots components are regarding operations, commands and the resolver component. As mentioned in the pip 20.2 release notes, they have recently updated their resolver12. This new resolver is more consistent than the old resolver when dealing with incompatible packages. Inconsistencies with incompatible packages in the old resolver resulted in many code changes, since these inconsistencies had to be solved.

Figure: Hotspot components pip using CodeScene code analysis

| File | Code changes |
|---|---|
| req/req_install.py | 382 |
| operations/prepare.py | 195 |
| commands/install.py | 190 |
| req/req_install.py | 173 |
| setup.py | 149 |
| req/req_set.py | 149 |

To find future hotspot components, we analysed the documentation and the code base. Although pip does not have a specific roadmap, the documentation [13] mentions that most code changes will take place in the resolver component and that further refactoring of this component is planned. The maintainers are therefore aware that this component is a future hotspot. Other parts of pip’s source code that might become hotspots are the operations described in the pip documentation [13]. These are implemented in very large files that should be refactored into smaller ones.

Code quality analysis

When analysing the code quality of pip, there are many different factors one can take into account, such as cyclomatic complexity and lines of code (LOC) per file. For our initial code quality analysis, we used CodeScene [11] to get an overview of the available metrics.

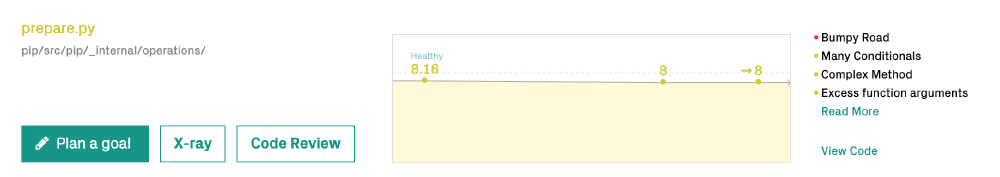

When analysing files that received a low code health grade, it showed that there are common issues among these files. The metrics that these files scored bad on contained the bumpy road code smell14, many function arguments and they are too complex.

Figure: CodeScene quality analysis prepare.py

We did some extra analysis on the hotspot components found in the previous section. What stood out most in these files [15] is that they are very long, contain many methods and have class constructors with many parameters. This is mostly in line with the results of the CodeScene [11] analysis. Another notable aspect is that a single file often contains several classes. These code smells could have been prevented by linters, but the current linters do not check for them. Refactoring the affected files would have a huge impact on the code base. In the future, the situation could be improved by refactoring the large files and adding a file-size check to the linters. Adding such a check is not feasible, however, until the existing files have been refactored.
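
As an illustration of what such a linter check could look like, the sketch below uses Python’s standard `ast` module to report over-long files and functions with many parameters. It is not an existing pip or linter check; the function name `find_size_smells` and both thresholds are assumptions chosen for the example.

```python
import ast

# Thresholds are illustrative assumptions, not values used by pip.
MAX_LINES = 500
MAX_ARGS = 5


def find_size_smells(source: str, max_lines: int = MAX_LINES, max_args: int = MAX_ARGS) -> list[str]:
    """Report an over-long file and functions with too many parameters."""
    problems = []
    line_count = len(source.splitlines())
    if line_count > max_lines:
        problems.append(f"file has {line_count} lines (limit {max_lines})")

    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            args = node.args
            # Count every kind of parameter, including *args and **kwargs.
            count = len(args.posonlyargs) + len(args.args) + len(args.kwonlyargs)
            count += (args.vararg is not None) + (args.kwarg is not None)
            if count > max_args:
                problems.append(
                    f"{node.name} (line {node.lineno}) takes {count} parameters (limit {max_args})"
                )
    return problems
```

Running a check like this on the current hotspot files would report many findings at once, which is why it only becomes practical as a CI gate after the large files have been refactored.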

Overall, pip scores decently on code quality, although some files score badly on complexity and LOC. However, as mentioned in pip’s documentation [13], the maintainers are fully aware of these code smells and plan to address them in the future.

Quality culture

This section discusses pip’s quality culture when it comes to issues and PRs, using examples of discussions in pip’s issues and PRs.

The table below lists the issues and their respective PRs that we examined for this evaluation. They were picked because their discussions and review processes are representative. Together, they should give a good picture of pip’s quality culture and how it is maintained through issues and PRs.

| Issue number | Issue status | Pull request number | Pull request status |
|---|---|---|---|
| 9139 | closed | 9405 | merged |
| 9516 | closed | 9522 | merged |
| 5948 | closed | 5952 | merged |
| 4390 | closed | 9636 | merged |
| 9677 | open | - | - |
| 5780 | open | 6401 | closed |
| 9617 | open | 9626 | merged |
| 3164 | open | 7499 | merged |
| 7279 | closed | 7765 | closed |
| 1668 | open | 7002 | merged |

Many of these issues are discussed extensively with maintainers to pinpoint the problem. These discussions lead either to a PR that solves the issue or to a decision not to solve it. When a discussion leads to a PR, the PR is reviewed by maintainers. The PR itself often contains further discussion about problems or missing points. Trivial issues and solutions require less discussion. A PR needs the approval of a member of the Python Packaging Authority [16] before it is merged. Sometimes a second opinion is needed, which is marked with a tag (S:needs discussion; S:needs triage).

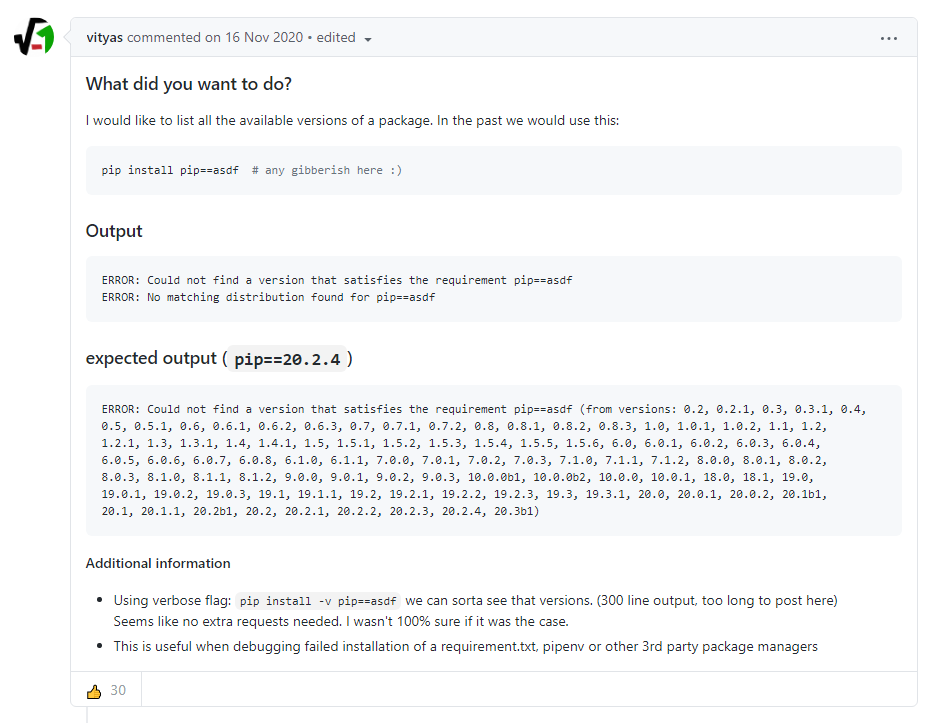

As example, the image below shows how issue 9139 started. The author of the issue raised a problem through an example of how the current output is and an example of how the actual output would be expected for a certain command. This issue is followed by a discussion among maintainers. They mentioned that the problem the author was getting through his command was deliberately removed from the code due to updates in the resolver of pip. Instead, a new issue was raised to remove the legacy resolver and resolved through PR 9405. This would be less confusing for future users of pip and issue 9139 would not be raised anymore.

Figure: Report of issue 9139

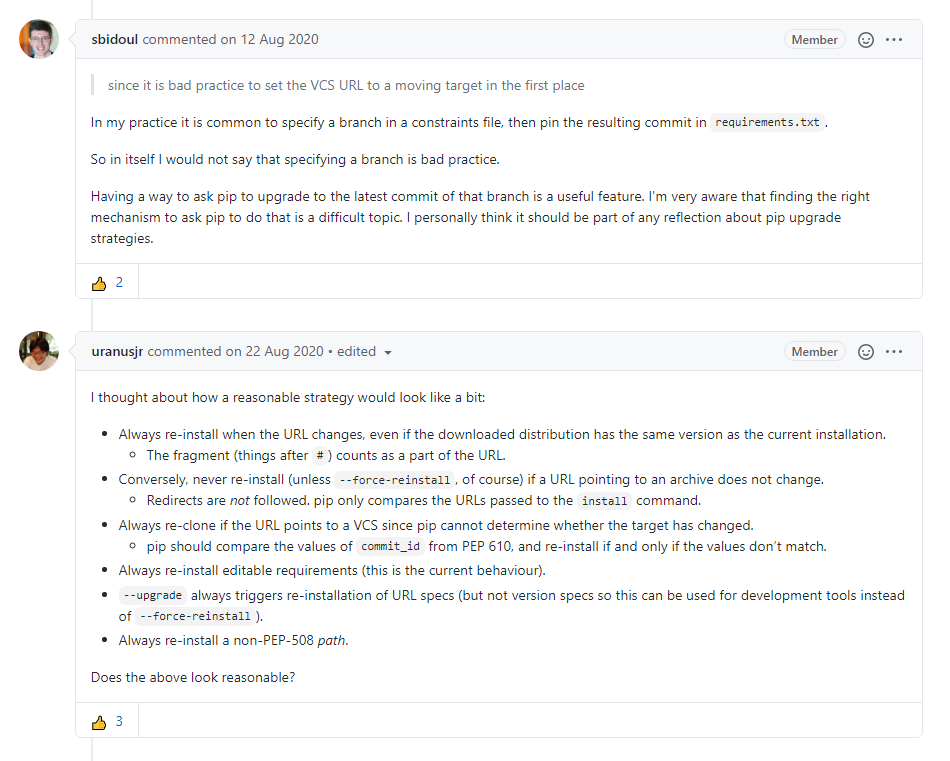

Issue 5780 is an example of multiple attempts to resolve the issue. This issue is related to the resolver of pip. In the previous section, we can see that the resolver goes through a lot of changes. With these changes going on, the solution for the issue needs to update itself such that it works for the new resolver as well. Possible approaches to handle this issue are raised through either comments or PRs. In the image below, a comment with a possible approach is introduced. Since this issue is not of top priority, the issue is being resolved rather slowly.

Figure: Uranusjr proposing a possible solution to tackle issue 5780

pip’s quality culture mostly revolves around good discussions about possible solutions and having them verified by at least one member of the Python Packaging Authority. The maintainers use tags to keep track of what kind of issue something is and which issues need a second opinion.

pip’s technical debt

According to Martin Fowler, systems are prone to the build-up of deficiencies in internal quality, which makes extending and modifying the system harder [17]. This section discusses some of the technical debt present within pip.

One form of technical debt within pip is legacy code. Quite a number of issues are aimed at removing legacy code or at general clean-up of the code base. These issues piled up, and in 2020 a project was started to clean up pip’s internals [18]. The effects of legacy code can be seen, for example, in PR 9631, where test cases are running on the legacy resolver.

As mentioned before, pip scores badly on complexity and LOC. This makes it harder for developers to read the code base and add code to it, and thus harder to develop pip. The maintainers are fully aware of this technical debt and plan to address it.

References

- [1] BrownTruck Bot, https://github.com/pypa/browntruck
- [2] pip’s documentation, https://pip.pypa.io/en/latest/development/
- [3] GitHub Actions, https://github.com/features/actions
- [4] Azure DevOps, https://azure.microsoft.com/en-us/services/devops/
- [5] Travis CI, https://travis-ci.org/
- [6] towncrier, https://pypi.org/project/towncrier/
- [7] Pytest, https://docs.pytest.org/en/stable/
- [9] Pretend, https://pypi.org/project/pretend/
- [11] CodeScene, https://codescene.io/
- [12] pip release 20.2, https://discuss.python.org/t/announcement-pip-20-2-release/4863
- [13] pip’s anatomy, https://pip.pypa.io/en/latest/development/architecture/anatomy/
- [14] CodeScene Bumpy Road Code Smell, https://codescene.com/blog/bumpy-road-code-complexity-in-context/#:~:text=complex%20state%20management.-,The%20Bumpy%20Road%20code%20smell%20is%20a%20function%20that%20contains,becomes%20an%20obstacle%20to%20comprehension.
- [15] pip req_install.py file, https://github.com/pypa/pip/blob/master/src/pip/_internal/req/req_install.py
- [16] Python Packaging Authority, https://www.pypa.io/en/latest/
- [17] Technical Debt, https://martinfowler.com/bliki/TechnicalDebt.html
- [18] Internal Cleansing project, https://github.com/pypa/pip/projects/1