In our previous blog post, we went in-depth on the topic of quality and evolution. Today, we are going to discuss the sustainability of Kubernetes. We try to answer questions such as: How green are Kubernetes and the cloud? How can you use Kubernetes sustainably? Is the work environment safe, respectful, and encouraging of development? Which aspects of the project organisation could be further improved to integrate sustainability? Read along to find out!

Energy Consumption

Energy consumption falls under the environmental sustainability of a system. We would like to see how Kubernetes manages this, since it is a container orchestration platform whose biggest impact on the environment is the energy it consumes.

Kubernetes and the cloud

There is active development in the cloud computing community aimed at using computing power more efficiently. As early as 2015, it was reported that customers of Amazon Web Services use 77% fewer servers, with a resulting 84% decrease in power consumption [1]. The amount of computing done in data centres increased fivefold from 2010 to 2018, while the energy consumed by data centres grew by only 6 per cent. This relatively small increase is attributed to the shift from old, inefficient data centres to computing in the cloud. To put this into perspective, data centres accounted for 1-2 per cent of global electricity consumption in both 2010 and 2018 [2][3]. Without the shift of computing power to the cloud, data centres would have consumed a much larger share of global electricity.
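To make the efficiency gain concrete, the two growth figures quoted above can be combined into a single number. The following is a minimal sketch of that arithmetic; the function name is our own and purely illustrative.

```python
# Figures quoted in the text: computing grew fivefold, energy use grew 6 per cent.
compute_growth = 5.0   # 2018 computing relative to 2010
energy_growth = 1.06   # 2018 energy use relative to 2010


def energy_per_unit_compute(compute_factor: float, energy_factor: float) -> float:
    """Return the energy needed per unit of computing, relative to the base year."""
    return energy_factor / compute_factor


relative_intensity = energy_per_unit_compute(compute_growth, energy_growth)
reduction_pct = (1 - relative_intensity) * 100

print(f"Energy per unit of computing: {relative_intensity:.3f}x the 2010 level")
print(f"Reduction: {reduction_pct:.1f} %")
```

In other words, under these figures a unit of computing in 2018 needed roughly a fifth of the energy it needed in 2010.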

In addition to increasing computing efficiency, there are also efforts to reduce carbon emissions by deploying Kubernetes nodes in geographical locations where electricity has a low carbon intensity [4]. The Natural Resources Defense Council highlights server utilization as the most important factor in determining the carbon footprint of server infrastructure [5]. Improving server utilization is also one of the top reasons enterprises want to adopt Kubernetes [6], and, apart from helping the environment, high cloud bills are a further motivation for it [7]. Kubernetes thus seems to help the environment indirectly, by improving the efficiency of a company’s technical infrastructure.

Load balancing and the Kubernetes Scheduler

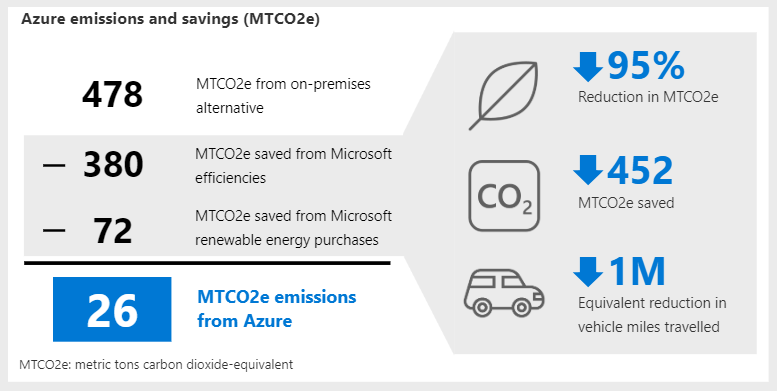

Using Kubernetes can significantly affect the impact your system has on the environment. This can be achieved through efficient server utilization in combination with load balancing, which ensures the best use of computing power [8]. Another factor worth considering is the Marginal Operating Emissions Rate (MOER) at the location of each node. MOER values express the pounds of carbon emitted per megawatt-hour of electricity generated [9]. Such data is used by large companies, including the cloud provider Microsoft Azure. Microsoft has a monitor for its Azure Kubernetes Service that measures resource usage and helps optimise efficiency in line with sustainable software engineering [10]. It even provides the Microsoft Sustainability Calculator to help enterprises analyse the carbon emissions of their IT infrastructure [11]. In addition to promoting sustainable software engineering practices, large cloud providers are concerned with using green energy [12]. Cloud computing seems to be the way forward if we want efficient computing that has as little impact on the environment as possible. The following figure displays Azure emissions and savings as shown by the Microsoft Sustainability Calculator [13].

Figure: Microsoft Sustainability Calculator for cloud computing.

In most cases, load balancing is time-efficient [14]. Spreading work over several systems as smaller, simpler tasks will consume less energy in total than keeping tasks waiting while a single system handles the current one. If you need to execute a task that requires a lot of computing power, it is therefore better to split it into subtasks distributed over multiple systems. In that sense, load balancing affects the overall energy consumption of the system. It can also affect your wallet: electric utilities charge large commercial and industrial customers a ‘peak demand penalty’ on top of the charge for the total electricity used over the billing period [15].
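The effect of a peak demand penalty can be illustrated with a small calculation. The sketch below assumes a simplified tariff with one energy rate and one demand rate; the rates and load profiles are hypothetical and not taken from any real utility.

```python
def electricity_bill(load_kw, hours_per_step, energy_rate, demand_rate):
    """Bill = energy charge on total kWh + demand charge on the highest kW drawn.

    load_kw: power drawn in each time step (kW)
    hours_per_step: length of one time step in hours
    energy_rate: price per kWh (hypothetical)
    demand_rate: price per kW of peak demand (hypothetical)
    """
    energy_kwh = sum(load_kw) * hours_per_step
    peak_kw = max(load_kw)
    return energy_kwh * energy_rate + peak_kw * demand_rate


# Two profiles that use the same total energy (120 kWh) over four hours.
spiky = [10, 10, 90, 10]      # one heavy task run all at once
balanced = [30, 30, 30, 30]   # the same work spread evenly

print(electricity_bill(spiky, 1, energy_rate=0.25, demand_rate=10))
print(electricity_bill(balanced, 1, energy_rate=0.25, demand_rate=10))
```

Both profiles pay the same energy charge, so the difference between the two bills comes entirely from the peak: the balanced profile peaks at 30 kW instead of 90 kW.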

The Kubernetes scheduler assigns pods to suitable nodes so that the kubelet on each node can run them [16]. The default scheduler is called kube-scheduler; it takes the resources a pod requests, such as memory and CPU time, into account to make sure the chosen node has them available to run the workload. Furthermore, you can define your own rules for the assignment of pods to nodes. One possible rule could be to take carbon intensity data into account when placing pods [17]. The carbon intensity of electricity is defined as the amount of CO2 emitted per kilowatt-hour of electricity produced [18].
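The idea of a carbon-aware placement rule can be sketched in a few lines. This is not how kube-scheduler is implemented or extended; it is a simplified illustration of "filter the nodes that fit, then prefer the one with the lowest carbon intensity". The `Node` class, the `pick_node` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    carbon_intensity: float  # gCO2 per kWh at the node's location (made-up data)


def pick_node(nodes: List[Node], cpu_request: int, memory_request: int) -> Optional[Node]:
    """Return the feasible node with the lowest carbon intensity, or None."""
    # Step 1: keep only nodes with enough free resources for the pod.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_request and n.free_memory_mib >= memory_request
    ]
    if not feasible:
        return None
    # Step 2: among the feasible nodes, prefer the greenest location.
    return min(feasible, key=lambda n: n.carbon_intensity)


nodes = [
    Node("node-a", 4000, 8192, 310.0),
    Node("node-b", 500, 1024, 45.0),    # greenest, but too small for the request
    Node("node-c", 2000, 4096, 120.0),
]
chosen = pick_node(nodes, cpu_request=1000, memory_request=2048)
print(chosen.name if chosen else "no feasible node")  # node-c
```

A real rule would likely weigh carbon intensity against other scoring criteria rather than let it decide alone.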

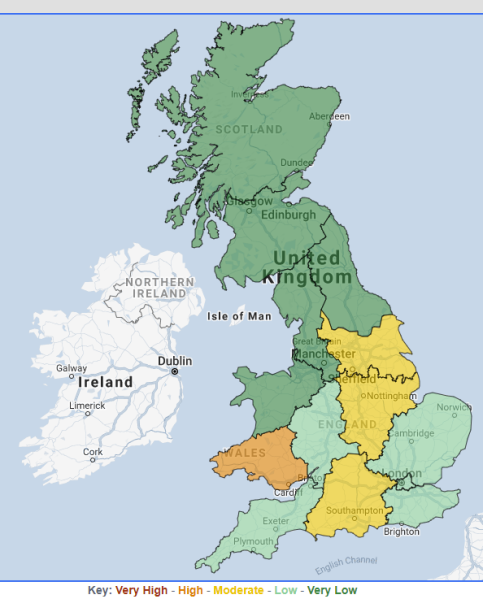

A good source for such a factor is the National Grid ESO’s Carbon Intensity API. This API provides forecasts of the expected regional carbon intensity of the electricity system in Great Britain [18]. The Kubernetes scheduler could consider the forecast when assigning pods to nodes. Imagine that the forecast says the carbon intensity in the region of a particular server will increase in the next hour, while a server in a different region with a lower carbon intensity is available. The scheduler could then move the workload to this other, lower carbon intensity server. The switch would not change how much energy the workload needs, but it would lower the carbon emissions caused by that energy whilst keeping the same performance. You can look at the current carbon intensity of (regions of) Great Britain in the following figure [18] and more information here.

Figure: Current carbon intensity of the United Kingdom.
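The forecast-based switch described above can be sketched as a small decision function. The sketch does not call the Carbon Intensity API; it assumes the regional forecasts have already been fetched into a dictionary. The region names, the numbers, and the `min_saving` threshold are hypothetical.

```python
from typing import Dict, Optional


def greener_region(current_region: str,
                   forecast: Dict[str, float],
                   min_saving: float = 0.0) -> Optional[str]:
    """Return a region worth moving to, or None if the workload should stay.

    forecast maps region name -> forecast carbon intensity (gCO2/kWh) for the
    next hour. A move is only suggested if it saves more than min_saving,
    so that tiny differences do not cause constant rescheduling.
    """
    if current_region not in forecast:
        raise ValueError(f"no forecast for region {current_region!r}")
    best = min(forecast, key=forecast.get)
    saving = forecast[current_region] - forecast[best]
    if best != current_region and saving > min_saving:
        return best
    return None


next_hour = {"region-north": 180.0, "region-south": 95.0}  # made-up forecast
print(greener_region("region-north", next_hour, min_saving=20.0))  # region-south
print(greener_region("region-south", next_hour))                   # None
```

The threshold is a design choice: moving a workload has its own cost, so a scheduler would probably only switch when the expected saving is large enough.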

Another example is the carbon intensity data API offered by WattTime [19]. Through its Automated Emissions Reduction (AER), WattTime produces MOER values [9]. This is illustrated in their interactive map of Grid Emissions Intensity by Electric Grid here. The MOER at the location of each available node can be factored into scheduling decisions. In this way, the Kubernetes scheduler can make decisions that are aware of energy consumption, and therefore of carbon emissions.
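Since MOER is expressed in pounds per megawatt-hour, it can be turned into an emissions estimate for a workload with a simple unit conversion. The sketch below shows that arithmetic; the energy figure and MOER values are hypothetical examples, not WattTime data.

```python
LB_TO_KG = 0.45359237  # exact definition of the international pound in kilograms


def emissions_kg(energy_kwh: float, moer_lbs_per_mwh: float) -> float:
    """Estimate emissions in kg for a workload, given the MOER at its location."""
    energy_mwh = energy_kwh / 1000
    return energy_mwh * moer_lbs_per_mwh * LB_TO_KG


# A workload that uses 500 kWh, placed in two locations with different MOERs.
high = emissions_kg(500, moer_lbs_per_mwh=1000)
low = emissions_kg(500, moer_lbs_per_mwh=400)
print(f"{high:.1f} kg vs {low:.1f} kg")
```

The same energy use leads to proportionally lower emissions where the MOER is lower, which is what makes the node's location relevant for scheduling.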

Not only Environmental Sustainability

So far we have seen how Kubernetes can reduce its negative effect on the environment, but we would also like to say a few words about the technical and social sustainability of the system. These are equally important aspects to consider in the development of a system. We believe that a system is successful when it strives to be as green as possible while being well maintained in a respectful and safe working environment.

Technical Sustainability - Maintenance

Kubernetes supports a process called draining [20]. Draining a node safely evicts all of its pods so that you can work on the node (e.g., during hardware maintenance). Multiple nodes can be drained in parallel.

You can also manage CPU, memory, and API resources when administering a Kubernetes cluster [21]. Moreover, you can set a PodDisruptionBudget (PDB) [22], which automated actions at the cluster level have to respect. A PDB limits how many pods of an application can be down at the same time because of an intentional disruption caused by the administrator or the application owner (e.g., node draining).
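As an illustration, a PodDisruptionBudget can be written as a small manifest. The sketch below builds one as a Python dictionary and prints it as JSON; the name `web-pdb`, the label `app: web`, and the value of `minAvailable` are hypothetical. The helper function only mirrors the basic idea of the budget and is not the controller's actual logic.

```python
import json

# A PodDisruptionBudget that keeps at least 2 pods labelled app=web running
# during voluntary disruptions such as a node drain.
pdb = {
    "apiVersion": "policy/v1",
    "kind": "PodDisruptionBudget",
    "metadata": {"name": "web-pdb"},           # hypothetical name
    "spec": {
        "minAvailable": 2,
        "selector": {"matchLabels": {"app": "web"}},  # hypothetical label
    },
}


def allowed_disruptions(healthy_pods: int, min_available: int) -> int:
    """How many pods may be evicted right now without violating the budget."""
    return max(0, healthy_pods - min_available)


print(json.dumps(pdb, indent=2))
print(allowed_disruptions(healthy_pods=3, min_available=2))  # 1
```

With three healthy pods and a minimum of two, a drain may evict only one pod at a time and has to wait for a replacement before evicting the next.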

Social Sustainability - Social Equity and Justice

Kubernetes has a clear set of rules to be respected by its contributors, called the “Contributor Code of Conduct”. It requires every contributor to be respectful towards other contributors and states that harassment of any form is not tolerated. The maintainers of the project are in charge of removing any inappropriate content. Anybody can report such content to the Code of Conduct Committee.

The community values are considered to be one of the major reasons for the success of Kubernetes [23]. These values are:

- Distribution of workload;

- Prioritization of people before the product;

- Automation of repetitive code (they do not want to waste time manually working on such code because “heroism is not sustainable” [23]);

- Inclusivity of people with different skills and levels of experience;

- Evolution within the community by recognizing values and promoting them.

We think these values should be followed by any company to promote a healthy working environment.

Possible Green Improvements for Kubernetes

Based on all the information acquired so far, we believe there is still room to improve the contributors’ awareness of ‘green code’. After a brainstorming session, we came up with the following ideas that Kubernetes could implement:

- A Dedicated Special Interest Group (SIG);

- Labels for green code;

- Bot measuring energy consumption for new features.

Dedicated SIG

Development of the Kubernetes project is mostly done by Special Interest Groups (SIGs), each focusing on its own part of the project. There are many groups for different parts, such as APIs, authentication, or networking [24]. These groups create, maintain, and improve their specific part of the project. A SIG can be created by anyone with a valid reason, and we think that improving the ‘greenness’ of the code is one. This SIG would not create or maintain new code in a distinct part of the project, but rather improve the code throughout the entire project in such a way that it reduces energy consumption and execution time, just like the examples mentioned before.

Labels for green code

In the Kubernetes GitHub repository, there are many different labels that can be assigned to a contribution to indicate what it is about [25]. In addition to these labels, there could be a ‘green’ label to indicate that the contribution incorporates some sort of ‘green’ code feature or an improvement to existing code. This label would draw more attention to sustainable code, could inspire other contributors to write it, and would make it easier to filter for contributions on green code.

Continuous Integration improvements

Kubernetes has a Continuous Integration (CI) bot called ‘k8s-ci-robot’. The Kubernetes maintainers could add a feature to this bot that measures the energy consumption of an improvement or new feature and compares it against the energy consumption of the current build. If it is significantly higher and the contributor has not anticipated and explained the increase, the code could be rejected, even though it passed all other tests. This change to the acceptance criteria could greatly improve the sustainability impact that Kubernetes has.
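Such a check could look roughly like the sketch below. It is not an existing feature of k8s-ci-robot; the function name, the 5 per cent tolerance, and the idea of a `justified` flag set by a reviewer are all assumptions, and how the energy would actually be measured is left open.

```python
def energy_gate(baseline_joules: float,
                candidate_joules: float,
                tolerance: float = 0.05,
                justified: bool = False) -> bool:
    """Return True if the contribution passes the (hypothetical) energy check.

    The candidate passes when its measured energy use exceeds the baseline by
    no more than `tolerance` (a fraction), or when the contributor's
    explanation for the increase has been accepted (`justified`).
    """
    if baseline_joules <= 0:
        raise ValueError("baseline must be positive")
    increase = (candidate_joules - baseline_joules) / baseline_joules
    return increase <= tolerance or justified


print(energy_gate(1000.0, 1040.0))                  # small increase
print(energy_gate(1000.0, 1200.0))                  # large, unexplained increase
print(energy_gate(1000.0, 1200.0, justified=True))  # large, but explained
```

The tolerance is there because energy measurements are noisy; without it, almost every pull request would trip the check.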

Another improvement to the CI could be to skip the integration tests for pull requests with certain labels and have an assignee check them instead (e.g., pull requests about typo fixes). Lastly, the CI could run only the tests applicable to the files the fix touched. This way, energy would not be wasted on building and testing the entire project when most of it was not affected by the contribution. Clear rules would have to define when it is indeed not necessary to build the whole project.

Figure: k8s-ci-robot being environmentally aware.

Conclusion

In conclusion, we have discussed energy consumption and carbon emissions, the other sides of sustainability, namely technical and social sustainability, and some ideas of our own to make Kubernetes even greener. We hope you enjoyed these blog posts and have become wiser than you were before reading them. If you want to (re)read our previous blog posts, you can find them here, here, and here. Thank you and take care!

References

- https://aws.amazon.com/blogs/aws/cloud-computing-server-utilization-the-environment/
- https://www.datacenterknowledge.com/energy/study-data-centers-responsible-1-percent-all-electricity-consumed-worldwide
- https://www.energy.gov/eere/buildings/data-centers-and-servers
- https://www.nrdc.org/sites/default/files/NRDC_WSP_Cloud_Computing_White_Paper.pdf
- https://www.replex.io/blog/announcing-state-of-kubernetes-report-replex
- https://www.theinformation.com/articles/as-aws-use-soars-companies-surprised-by-cloud-bills
- https://devblogs.microsoft.com/sustainable-software/carbon-aware-kubernetes/
- https://www.watttime.org/solutions/renewable-energy-siting-emissionality/
- https://docs.microsoft.com/en-us/azure/aks/concepts-sustainable-software-engineering
- https://azure.microsoft.com/nl-nl/blog/microsoft-sustainability-calculator-helps-enterprises-analyze-the-carbon-emissions-of-their-it-infrastructure/
- https://www.infoq.com/news/2020/02/Microsoft-Sustainability/
- https://www.nginx.com/resources/glossary/load-balancing/#:~:text=A%20load%20balancer%20acts%20as,overworked%2C%20which%20could%20degrade%20performance.
- https://www.ecmweb.com/power-quality-reliability/article/20892715/saving-energy-through-load-balancing-and-scheduling
- https://kubernetes.io/docs/concepts/scheduling-eviction/kube-scheduler/
- https://devblogs.microsoft.com/sustainable-software/carbon-aware-kubernetes/
- https://kubernetes.io/docs/tasks/administer-cluster/safely-drain-node/
- https://kubernetes.io/docs/tasks/administer-cluster/manage-resources/
- https://kubernetes.io/docs/concepts/workloads/pods/disruptions/
- https://github.com/kubernetes/community/blob/master/sig-list.md